Oh hey there, tech enthusiasts! Today, we are diving deep into the realms of Approximate Nearest Neighbor (ANN) algorithms in Python. It’s going to be a thrilling ride, so fasten your seatbelts!

Introduction to Approximate Nearest Neighbor Algorithms

Nearest Neighbor algorithms play a pivotal role in machine learning, especially in classification and clustering tasks. But when we step into the world of large, high-dimensional datasets, finding the exact neighbors becomes a real brain-teaser: a brute-force search must compare the query with every stored vector, and space-partitioning structures such as k-d trees lose most of their advantage as the number of dimensions grows. That’s where Approximate Nearest Neighbor (ANN) algorithms swoop in, trading a little accuracy for a large gain in speed.
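For reference, exact search is easy to write but scans everything. The minimal NumPy sketch below (the function name exact_knn is our own) computes the distance from the query to all n stored vectors, so every query costs on the order of n × d operations. Its output is also the ground truth against which ANN results are measured.

```python
import numpy as np


def exact_knn(data: np.ndarray, query: np.ndarray, k: int) -> np.ndarray:
    """Return the indices of the k rows of `data` closest to `query` (Euclidean)."""
    # Squared Euclidean distance from the query to every stored vector: O(n * d).
    dists = np.sum((data - query) ** 2, axis=1)
    # argpartition gathers the k smallest distances in O(n); then sort only those k.
    idx = np.argpartition(dists, k - 1)[:k]
    return idx[np.argsort(dists[idx])]


rng = np.random.default_rng(0)
data = rng.standard_normal((1000, 40))   # 1000 vectors in 40 dimensions
query = rng.standard_normal(40)
print(exact_knn(data, query, 10))
```

Annoy and FAISS exist precisely to avoid this full scan on every query.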

Understanding the Essence of ANN Algorithms

ANN algorithms are the heroes that accept approximate answers in exchange for speed. The neighbors they return are not guaranteed to be the true nearest ones, so they may not always hit the bullseye, but they usually land very close!

The Working Mechanism of ANN

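Diving a bit deeper, ANN algorithms speed up the search by building an index that narrows each query down to a small set of promising candidates. Locality-sensitive hashing (LSH) does this with hash tables that tend to put similar vectors into the same bucket, tree-based methods such as Annoy recursively partition the space, and cluster-based methods such as the inverted-file indexes in FAISS scan only the groups closest to the query; many methods also compress or project the vectors into fewer dimensions. It’s like skimming through the pages of a book instead of reading word by word.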
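As a concrete instance of the hashing approach, here is a minimal sketch of random-hyperplane LSH in NumPy. It is a textbook LSH scheme for cosine similarity, not what Annoy or FAISS do internally, and the class name HyperplaneLSH and its parameters (n_bits, n_tables) are our own. Each vector is hashed to a bit string recording which side of several random hyperplanes it falls on; vectors pointing in similar directions tend to share a bucket, so a query is compared only with the vectors in its own buckets.

```python
from collections import defaultdict

import numpy as np


class HyperplaneLSH:
    """Random-hyperplane LSH for cosine similarity (illustrative sketch)."""

    def __init__(self, dim: int, n_bits: int = 8, n_tables: int = 4, seed: int = 0):
        rng = np.random.default_rng(seed)
        # One set of n_bits random hyperplane normals per hash table.
        self.planes = rng.standard_normal((n_tables, n_bits, dim))
        self.tables = [defaultdict(list) for _ in range(n_tables)]
        self.data = None

    def _keys(self, v: np.ndarray) -> list:
        # Bit j of table t is True if v lies on the positive side of hyperplane j.
        bits = (self.planes @ v) > 0  # shape (n_tables, n_bits)
        return [row.tobytes() for row in bits]

    def index(self, data: np.ndarray) -> None:
        self.data = data
        for i, v in enumerate(data):
            for table, key in zip(self.tables, self._keys(v)):
                table[key].append(i)

    def query(self, q: np.ndarray, k: int) -> list:
        # Candidates: every vector sharing a bucket with q in at least one table.
        cand = set()
        for table, key in zip(self.tables, self._keys(q)):
            cand.update(table.get(key, []))
        if not cand:
            return []
        cand = np.fromiter(cand, dtype=int)
        # Re-rank only the candidates by exact cosine similarity (assumes non-zero vectors).
        vecs = self.data[cand]
        sims = vecs @ q / (np.linalg.norm(vecs, axis=1) * np.linalg.norm(q))
        return cand[np.argsort(-sims)[:k]].tolist()


rng = np.random.default_rng(1)
data = rng.standard_normal((2000, 40))
lsh = HyperplaneLSH(dim=40)
lsh.index(data)
print(lsh.query(data[0], 5))
```

More bits per table make the buckets smaller (faster, but more true neighbors missed), while more tables raise recall at the cost of memory.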

The Importance of Accuracy Measurement

Accuracy is the backbone in the world of ANNs. Because the answers are approximate, we need to measure how many of the true nearest neighbors our algorithm actually finds, ensuring it’s not just fast but also hitting the mark!

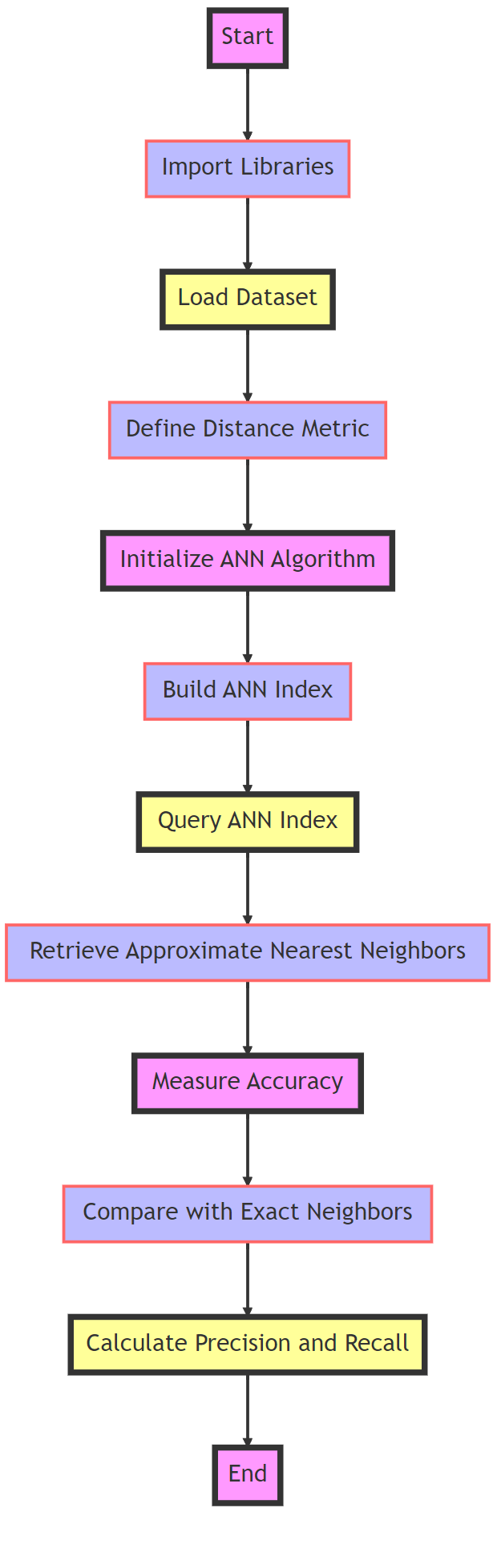

Implementing ANN Algorithms in Python

Python, being the versatile and powerful language that it is, provides an excellent platform to implement and assess ANN algorithms. Let’s unravel the Pythonic way to approach ANNs!

Utilizing Libraries and Frameworks

In Python, we are blessed with libraries like Annoy and FAISS that make implementing ANN search smooth sailing. It’s like having a tech wizard by our side!

```python
import random

import numpy as np
from annoy import AnnoyIndex

# Define the dimension of our vectors
dim = 40

# Create an Annoy index with the Euclidean distance metric
annoy_index = AnnoyIndex(dim, 'euclidean')

# Let's fill it with 1000 random vectors, using ids 0..999
for i in range(1000):
    v = [random.gauss(0, 1) for _ in range(dim)]
    annoy_index.add_item(i, v)

# Build the index with 10 trees
annoy_index.build(10)

# Let's assume we have a query vector, and we wish to find its nearest neighbors
query_vector = np.random.randn(dim)

# Fetch the 10 nearest neighbors to our query vector
# (converted to a plain Python list, like the vectors passed to add_item)
neighbors = annoy_index.get_nns_by_vector(query_vector.tolist(), 10)

print("Query Vector: ", query_vector)
print("10 Nearest Neighbors: ", neighbors)
```

Code Explanation:

- Import Libraries:
  - We start by importing the necessary libraries: random generates the vectors we store, NumPy creates the query vector, and AnnoyIndex from annoy is our star for today.

- Initialize Annoy Index:
  - We initialize the Annoy index with 40 dimensions and specify ‘euclidean’ as the distance metric we want to use.

- Add Vectors to Index:
  - We then add 1000 random vectors to the index, each identified by an integer id from 0 to 999. It’s like we are creating a mini universe with 1000 points in it!

- Build the Index:
  - We build the index with 10 trees. More trees give more accurate results but take longer to build and produce a larger index.

- Query the Index:
  - Now, we have a random query vector, and we want to know which vectors in our mini universe are its neighbors. So, we fetch the 10 nearest neighbors of our query vector from the index; Annoy returns them as a list of item ids, closest first.

- Print the Results:
  - Finally, we print out our query vector and its newfound neighbors!

It’s like Annoy is our magical guide helping us through the land of high dimensions, finding friends for our lonely vectors! Isn’t Python just full of wonders?

Annoy and FAISS are like magical wands for Python programmers dealing with Approximate Nearest Neighbor (ANN) problems.

Annoy Library:

- What it Does: Annoy, which stands for Approximate Nearest Neighbors Oh Yeah, is a C++ library with Python bindings for searching for points in space that are close to a given query point. It also creates large read-only file-based data structures that are mmapped into memory, so that many processes can share the same data.

- How it Works: Annoy builds a forest of trees. Each tree is built by picking two points at random and splitting the data with the hyperplane equidistant from them; the process repeats recursively on each side until the subsets are small. More trees give more precision, at the cost of a larger index.

- Use Cases: Annoy was developed at Spotify for music recommendations, and it is particularly useful in recommendation systems, where you have a large dataset and need to quickly find the items most similar to a user’s interests.
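The tree-building idea above can be sketched in NumPy. This is a simplified, hypothetical re-implementation for intuition only (the names build_tree, leaf_for, forest_query and the leaf_size parameter are ours), not Annoy’s C++ code: each split picks two random points and divides the data by the hyperplane equidistant from them, and a query descends each tree to a single leaf, pools those leaves as candidates, and re-ranks them by exact distance. Real Annoy goes further, using a priority queue to explore more than one branch per tree, with the amount of work controlled by its search_k parameter.

```python
import numpy as np


def build_tree(data, idx, rng, leaf_size=16):
    """Recursively split the points in `idx` with random two-point hyperplanes."""
    if len(idx) <= leaf_size:
        return idx  # leaf: an array of point indices
    a, b = data[rng.choice(idx, size=2, replace=False)]
    normal = a - b
    offset = normal @ (a + b) / 2  # hyperplane equidistant from a and b
    side = data[idx] @ normal > offset  # True = closer to a
    if side.all() or not side.any():  # degenerate split (e.g. duplicates): stop
        return idx
    return (normal, offset,
            build_tree(data, idx[side], rng, leaf_size),
            build_tree(data, idx[~side], rng, leaf_size))


def leaf_for(tree, q):
    # Follow the query's side of each hyperplane down to a leaf.
    while isinstance(tree, tuple):
        normal, offset, closer_to_a, closer_to_b = tree
        tree = closer_to_a if q @ normal > offset else closer_to_b
    return tree


def forest_query(data, forest, q, k):
    # Pool one leaf per tree as candidates, then re-rank by exact distance.
    cand = np.unique(np.concatenate([leaf_for(t, q) for t in forest]))
    dists = np.sum((data[cand] - q) ** 2, axis=1)
    return cand[np.argsort(dists)[:k]]


rng = np.random.default_rng(0)
data = rng.standard_normal((1000, 40))
all_idx = np.arange(len(data))
forest = [build_tree(data, all_idx, rng) for _ in range(10)]  # 10 trees
print(forest_query(data, forest, data[0], 5))
```

Each extra tree adds another leaf to the candidate pool, which is why more trees buy precision at the cost of memory and query time.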

FAISS Library:

- What it Does: FAISS, developed by Facebook AI Research, is a library for efficient similarity search and clustering of dense vectors, designed for use on big datasets.

- How it Works: FAISS constructs an index of the dataset vectors that can then be searched efficiently for the nearest neighbors of a query vector. It offers many index types, from exact brute-force search to approximate indexes built on clustering, vector compression, and graphs, with optional GPU support. It’s like having a well-organized library where you can easily find the book you are looking for!

- Use Cases: It is well suited to scenarios like face recognition, where each face can be represented as a vector and we need to find the matching faces efficiently.
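One of FAISS’s main approximate index families is the inverted file (IVF): vectors are grouped into nlist clusters by k-means, and a query scans only the nprobe clusters whose centroids are closest to it. In FAISS itself this is faiss.IndexIVFFlat with its nprobe setting; the pure-NumPy sketch below (hypothetical helper names kmeans and IVFIndex, and a deliberately naive k-means) only illustrates the idea and is not FAISS’s implementation.

```python
import numpy as np


def kmeans(data, n_clusters, n_iter=10, seed=0):
    """Minimal Lloyd's k-means: returns centroids and each point's cluster id."""
    rng = np.random.default_rng(seed)
    centroids = data[rng.choice(len(data), n_clusters, replace=False)]

    def assign_points():
        # Squared distance from every point to every centroid: shape (n, n_clusters).
        d = ((data[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        return d.argmin(axis=1)

    for _ in range(n_iter):
        assign = assign_points()
        for c in range(n_clusters):
            members = data[assign == c]
            if len(members):  # keep the old centroid if a cluster ends up empty
                centroids[c] = members.mean(axis=0)
    return centroids, assign_points()


class IVFIndex:
    def __init__(self, data, nlist=32, seed=0):
        self.data = data
        self.centroids, assign = kmeans(data, nlist, seed=seed)
        # Inverted lists: for each cluster, the ids of the vectors assigned to it.
        self.lists = [np.flatnonzero(assign == c) for c in range(nlist)]

    def search(self, q, k, nprobe=4):
        # Probe only the nprobe clusters whose centroids are closest to q (nprobe >= 1).
        cdist = ((self.centroids - q) ** 2).sum(axis=1)
        probe = np.argsort(cdist)[:nprobe]
        cand = np.concatenate([self.lists[c] for c in probe])
        # Exact distances, but only for vectors in the probed clusters.
        dists = ((self.data[cand] - q) ** 2).sum(axis=1)
        return cand[np.argsort(dists)[:k]]


rng = np.random.default_rng(0)
data = rng.standard_normal((2000, 40))
ivf = IVFIndex(data, nlist=32)
print(ivf.search(data[0], k=5, nprobe=4))
```

Raising nprobe scans more clusters, so recall goes up and speed goes down; with nprobe equal to nlist the search becomes exact.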

Detailed Example:

Let’s go back to our Annoy example and delve a bit deeper.

- Building Index: In our example, we created an Annoy index of 40 dimensions and added 1000 random vectors to it. Each vector can be visualized as a point in 40-dimensional space.

- Index Trees: We built the index with 10 trees, meaning Annoy creates 10 different binary trees from our vectors. Each tree is created by randomly selecting two vectors, splitting the remaining vectors into two partitions by the hyperplane equidistant from them, and repeating this recursively.

- Querying: When we queried the index with a new random vector, Annoy traversed the trees to gather candidate vectors from the regions the query falls into, computed their actual distances to the query, and returned the closest ones as our approximate nearest neighbors.

- Applications: Such implementations are handy when dealing with huge datasets in real-time applications, where computing the exact nearest neighbors would be too expensive.

In essence, Annoy and FAISS are like the guardians of the high-dimensional space, helping us navigate through it and find the treasures (data points) we are looking for!

Assessing the Accuracy of ANN Algorithms

Accuracy measurement is the essence of validating the effectiveness of our ANN algorithm. It’s the mirror reflecting the true capabilities of our implementation!

Employing Different Metrics

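Accuracy in ANN is gauged by comparing the returned neighbors with the exact ones found by brute-force search, using metrics like precision, recall, and F1 score. The most common is recall@k: the fraction of the true k nearest neighbors that appear among the k results returned. It’s like evaluating the sharpness of a knife from different angles!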
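For a single query, the three metrics can be computed by treating the exact k nearest neighbors as the relevant set and the ANN output as the retrieved set. The small helper below (our own, hypothetical name ann_scores) also shows a property worth knowing: when the ANN returns exactly k results, precision, recall, and F1 coincide, which is why ANN evaluations commonly report a single recall@k figure.

```python
def ann_scores(returned, true_neighbors):
    """Precision, recall and F1 of one ANN query against exact ground truth."""
    hits = len(set(returned) & set(true_neighbors))
    precision = hits / len(returned) if len(returned) else 0.0
    recall = hits / len(true_neighbors) if len(true_neighbors) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# The ANN returned 5 ids; the exact search says these 5 are the true neighbors.
print(ann_scores([3, 8, 1, 9, 4], [1, 3, 4, 8, 7]))
```

Here 4 of the 5 true neighbors were returned, so precision, recall, and F1 all come out to 0.8 (up to floating-point rounding).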

Analyzing the Results

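Once we have the metrics, it’s crucial to interpret them correctly. A mean recall@10 of 0.9 means that, on average, 9 of the 10 true neighbors were found; that number only tells the full story next to the query time and build time it cost, and an average can hide individual queries that did much worse. It’s about understanding what the numbers are whispering to us about the performance of our ANN!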

The Real-world Impact of Accurate ANN Algorithms

In the contemporary tech world, ANN algorithms power fast similarity search across diverse domains, especially in fields such as image and speech recognition, where inputs are compared as high-dimensional vectors.

Application in Various Domains

From healthcare to finance, ANN algorithms are spreading their wings, offering innovative solutions and driving progress. It’s like watching a tech phoenix rise!

The Future Prospects of ANN Algorithms

The journey of ANN algorithms is just beginning. With the constant evolution in technology, the future holds limitless possibilities for more advanced and accurate ANN implementations.

Conclusion

Measuring the accuracy of Approximate Nearest Neighbor Algorithms in Python is like embarking on a thrilling journey through the realms of machine learning and data science. We delved deep into the essence of ANN algorithms, explored their implementation in Python, and realized the importance of assessing their accuracy. With the real-world applications of ANN stretching across diverse domains, the future seems to be brimming with endless possibilities and advancements in this field.

So, my tech buddies, keep exploring, keep coding, and let’s continue to unravel the mysteries of the tech universe together! And remember, it’s not just about the speed, it’s about hitting the mark with precision!