Understanding Data Partitioning Methods

In the realm of big data analysis projects, data partitioning plays a crucial role in managing and analyzing massive datasets efficiently. Let’s strap in for a rollercoaster ride as we delve into the enchanting world of data partitioning methods 🎢!

Overview of Data Partitioning

Data partitioning is like organizing a grand feast with each dish meticulously divided into portions for different guests, ensuring everyone gets their favorite dish in just the right amount. Similarly, in data partitioning, large datasets are split into smaller, more manageable chunks distributed across multiple nodes or servers. This division allows for parallel processing, enabling faster analyses and computations.
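Here is a minimal plain-Python sketch of the "split, then process in parallel" idea. It assumes the dataset is an in-memory list and uses threads from `concurrent.futures` as stand-ins for worker nodes. The helper names `split_into_partitions`, `partial_sum` and `parallel_total` are hypothetical. Engines such as Spark or Dask run partitions on separate processes or machines, but the pattern is the same: work on each partition independently, then combine the partial results.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_partitions(records, num_partitions):
    """Split a list into num_partitions contiguous chunks of near-equal size."""
    size, remainder = divmod(len(records), num_partitions)
    partitions = []
    start = 0
    for i in range(num_partitions):
        # The first `remainder` partitions receive one extra record each.
        end = start + size + (1 if i < remainder else 0)
        partitions.append(records[start:end])
        start = end
    return partitions

def partial_sum(partition):
    """Work done independently on a single partition (here: a simple sum)."""
    return sum(partition)

def parallel_total(records, num_partitions=4):
    partitions = split_into_partitions(records, num_partitions)
    # Threads stand in for worker nodes; each one handles one partition.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(partial_sum, partitions))
    # Combine the per-partition results into the final answer.
    return sum(partials)

data = list(range(1, 10_001))
print(parallel_total(data))  # 50005000
```

Contiguous chunks are the simplest choice. The partitioning techniques below decide *which* records go together, which matters once the per-partition work depends on keys.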

Partitioning in Action

Imagine you have a gigantic pizza to share with friends. Instead of struggling to cut it into slices for everyone in one go, you decide to divide and conquer. Each friend gets a mini pizza to relish – that’s the magic of data partitioning!

Types of Data Partitioning Techniques

Data partitioning techniques come in a variety of flavors, each with its unique characteristics and use cases. Let’s explore some popular techniques:

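- Hash Partitioning: Think of this like a wizard casting spells to distribute data based on a hash function. Each record's partition is computed from a hash of its key (for example, hash(key) mod number_of_partitions). Records with the same key always land in the same partition, and a good hash spreads distinct keys roughly evenly. Heavily skewed keys can still create "hot" partitions.

- Range Partitioning: Just like organizing books on a shelf alphabetically, range partitioning assigns each record to a partition based on predefined key ranges (for example, by date or by ID). It simplifies searching because a query for a specific range only needs to read the partitions that cover it.

- Round-Robin Partitioning: Picture a dealer handing out cards around a table, one to each player in turn. Similarly, records are assigned to nodes in circular order. Every node ends up with an almost identical number of records, though related records are scattered across nodes.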
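The three strategies above can be sketched in a few lines of Python. This is a toy, single-machine illustration built on our own assumptions. Records are dictionaries and partitions are plain lists. The functions `hash_partition`, `range_partition` and `round_robin_partition`, and the sample `user_id`/`age` fields, are hypothetical and do not belong to any particular database's API. The sketch uses `zlib.crc32` instead of the built-in `hash()` because Python salts string hashes per process, which would make partition assignments change between runs.

```python
import bisect
import zlib

def hash_partition(records, key, num_partitions):
    """Place each record in partition crc32(key) mod num_partitions."""
    partitions = [[] for _ in range(num_partitions)]
    for record in records:
        digest = zlib.crc32(str(record[key]).encode("utf-8"))
        partitions[digest % num_partitions].append(record)
    return partitions

def range_partition(records, key, boundaries):
    """With boundaries [30, 50]: partition 0 holds key < 30,
    partition 1 holds 30 <= key < 50, partition 2 holds key >= 50."""
    partitions = [[] for _ in range(len(boundaries) + 1)]
    for record in records:
        # bisect_right counts how many (sorted) boundaries are <= the key value.
        index = bisect.bisect_right(boundaries, record[key])
        partitions[index].append(record)
    return partitions

def round_robin_partition(records, num_partitions):
    """Deal records out to partitions in turn: 0, 1, 2, 0, 1, 2, ..."""
    partitions = [[] for _ in range(num_partitions)]
    for position, record in enumerate(records):
        partitions[position % num_partitions].append(record)
    return partitions

# Twelve hypothetical user records with ages between 18 and 74.
users = [{"user_id": i, "age": 18 + (i * 7) % 60} for i in range(12)]

print([len(p) for p in hash_partition(users, "user_id", 3)])      # sizes depend on the crc32 values
print([len(p) for p in range_partition(users, "age", [30, 50])])  # [4, 4, 4]
print([len(p) for p in round_robin_partition(users, 3)])          # [4, 4, 4]
```

The range result is even here only because the sample ages happen to split that way. With real data, badly chosen boundaries can leave one partition doing most of the work.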

Exploring Data Sampling Techniques

Sampling is like taking a bite of a dish to taste its flavors before devouring the whole plate. It's a powerful technique in big data analysis, offering insights without examining the entire dataset. Let's dive deep into the ocean of data sampling techniques and ride the waves of possibilities! 🏄‍♀️

Importance of Data Sampling in Big Data Analysis

Sampling is the secret sauce that saves time and resources in analyzing colossal datasets. By taking small representative samples, analysts can draw meaningful conclusions without sifting through mountains of data. It’s like predicting the weather by observing a few clouds instead of scanning the entire sky.
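You can see why a representative sample works by comparing a sample statistic against the full-data value. The sketch below assumes a synthetic population of one million exponentially distributed values, standing in for something like order amounts. It draws a 1% simple random sample with NumPy's `Generator.choice`. The approximate 95% interval uses the usual 1.96 × standard-error rule.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical "full" dataset: one million order amounts.
population = rng.exponential(scale=50.0, size=1_000_000)

# Simple random sample of 1% of the values, drawn without replacement.
sample = rng.choice(population, size=10_000, replace=False)

full_mean = population.mean()
sample_mean = sample.mean()
# Standard error of the sample mean: sample standard deviation / sqrt(n).
std_error = sample.std(ddof=1) / np.sqrt(sample.size)

print(f"full mean:   {full_mean:.2f}")
print(f"sample mean: {sample_mean:.2f} +/- {1.96 * std_error:.2f} (approx. 95% interval)")
```

Reading 1% of the data gives an estimate whose uncertainty can be stated explicitly. That explicit uncertainty is what separates a "representative" sample from a lucky guess.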

Why Sampling Rocks

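Sampling allows analysts to extract key insights rapidly, like judging a whole haystack by inspecting a few armfuls instead of every straw. It provides a sneak peek into the dataset's characteristics and guides further analysis efficiently. It is less suited to hunting for genuine needles, though: a small sample can easily miss rare events.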

Various Data Sampling Approaches

The world of data sampling is rich with diverse methodologies tailored to different analysis needs. Let’s explore some popular approaches:

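- Random Sampling: Imagine blindly picking candies from a jar – that's random sampling! Every data point has an equal chance of selection, which avoids systematic selection bias. The results still carry random sampling error.

- Stratified Sampling: Like a master chef creating a balanced recipe, stratified sampling first divides data into homogeneous groups (strata), then samples within each group. Every group is guaranteed to be represented, typically in proportion to its share of the data.

- Cluster Sampling: Think of this as picking a few tables at a party at random and interviewing everyone seated there, instead of tracking down individual guests all over the room. The dataset is divided into natural clusters (such as stores or regions), a random subset of clusters is selected, and all records in those clusters are examined. It is cheaper to collect, but less precise when clusters differ a lot from one another.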
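The three approaches above map neatly onto pandas. This minimal sketch uses a made-up customer table. The columns `region` (treated as the strata), `store_id` (treated as the clusters) and `spend` are assumptions for illustration, as are the 5% and 10-store sample sizes. It relies on `DataFrame.sample` and `DataFrameGroupBy.sample`; the latter requires pandas 1.1 or newer.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(seed=7)
n = 10_000

# Hypothetical customer table: region acts as the stratum, store_id as the cluster.
df = pd.DataFrame({
    "region": rng.choice(["north", "south", "east", "west"], size=n, p=[0.4, 0.3, 0.2, 0.1]),
    "store_id": rng.integers(0, 200, size=n),
    "spend": rng.gamma(shape=2.0, scale=30.0, size=n),
})

# Random sampling: every row has the same chance of being selected.
random_sample = df.sample(frac=0.05, random_state=1)

# Stratified sampling: take 5% within each region, so the region mix is preserved.
stratified_sample = df.groupby("region").sample(frac=0.05, random_state=1)

# Cluster sampling: choose 10 whole stores at random and keep every row from them.
chosen_stores = rng.choice(df["store_id"].unique(), size=10, replace=False)
cluster_sample = df[df["store_id"].isin(chosen_stores)]

# Compare region shares: the stratified column should track the full table closely.
print(pd.DataFrame({
    "full": df["region"].value_counts(normalize=True),
    "random": random_sample["region"].value_counts(normalize=True),
    "stratified": stratified_sample["region"].value_counts(normalize=True),
}).round(3))
print(f"cluster sample: {len(cluster_sample)} rows from {cluster_sample['store_id'].nunique()} stores")
```

The cluster sample's size is not fixed in advance: it depends on how many rows the chosen stores happen to have.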

Stay tuned for the next part where we dive deeper into implementing these techniques into your big data analysis project! Let’s waltz through the data intricacies with finesse, my fellow IT enthusiasts! 🕺💃

Finally, let’s nail this project with finesse! Thank you for embarking on this exhilarating journey with me. Remember, the best way to predict the future is to create it! 💻✨

Program Code – Exploring Data Partitioning and Sampling Methods for Big Data Analysis Project

Analyzing big data efficiently requires savvy partitioning and sampling techniques. For today's foray, let's concoct a Python program that puts two of them to work on a mock dataset: a plain random train/test split and a stratified split. Crack those knuckles, and let us embark on a coding adventure most grand!

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Generate a mock "big" dataset: 10,000 rows, two features, one binary outcome
np.random.seed(42)
data_size = 10000

big_data_df = pd.DataFrame({
    'feature1': np.random.randn(data_size),         # standard normal values
    'feature2': np.random.rand(data_size) * 100,    # uniform values in [0, 100)
    'outcome': np.random.choice([0, 1], data_size)  # binary label, roughly 50/50
})

# Method 1: Random partitioning (a plain random train/test split)
def random_partition(data, test_size=0.2, random_state=42):
    train, test = train_test_split(data, test_size=test_size, random_state=random_state)
    return train, test

# Method 2: Stratified sampling (both subsets keep the class proportions of `target`)
def stratified_sampling(data, target='outcome', test_size=0.2, random_state=42):
    train, test = train_test_split(
        data, test_size=test_size, stratify=data[target], random_state=random_state
    )
    return train, test

# Testing the functions
random_train, random_test = random_partition(big_data_df)
stratified_train, stratified_test = stratified_sampling(big_data_df)

print(f'Random Partitioning - Train Size: {random_train.shape[0]}, Test Size: {random_test.shape[0]}')
print(f'Stratified Sampling - Train Size: {stratified_train.shape[0]}, Test Size: {stratified_test.shape[0]}')
```

Expected Code Output:

```text
Random Partitioning - Train Size: 8000, Test Size: 2000
Stratified Sampling - Train Size: 8000, Test Size: 2000
```

Code Explanation:

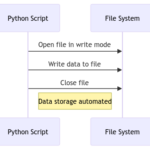

This aromatic brew of Python code takes a hands-on look at data partitioning and sampling, both crucial for navigating the treacherous seas of big data analysis. Here's the gist of our magic potion:

- Mixing The Cauldron (Data Generation): We start by conjuring a synthetic big data set named `big_data_df` using our sorcery (and `numpy` & `pandas`). It's fashioned with 10,000 records, featuring two independent variables (`feature1`, `feature2`) and a binary outcome variable.

- Random Partitioning Method: Our first spell, `random_partition`, randomly splits the dataset into training and test subsets. We use `train_test_split` from the sacred tomes of `sklearn.model_selection`, with a default potion strength (test size) of 20%. This method, akin to casting lots, doesn't consider the distribution of outcomes and leaves each subset's class mix to the whims of fate.

- Stratified Sampling Method: The second incantation, `stratified_sampling`, passes `stratify=data[target]` so that the training and test concoctions both keep the original proportion of the outcome variable. It's particularly potent with imbalanced datasets, where a plain random split could leave the test elixir with too few examples of the rare class. Note that stratification preserves the existing ratio; it does not rebalance the classes.

- Epilogue – The Taste Test: By summoning these methods upon our big data set and invoking the sacred print incantation, we observe the division of our data realm: 8,000 records for training and 2,000 for testing, in both random and stratified manners.

Thus, through the dark arts of Python and a dash of statistical sorcery, we’ve glimpsed how partitioning and sampling spells can illuminate the path through big data forests, ensuring our analysis grimoire is both powerful and balanced. Remember, young data wizard, wield these powers wisely!
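The benefit of `stratify` is easiest to see when the outcome is imbalanced. In the mock data above the outcome is roughly 50/50, so both splits look alike. The sketch below is a variation built on our own assumptions, not part of the original program. It generates a hypothetical dataset with about 5% positives and compares the positive rate in a plain and a stratified 20% test split, using scikit-learn's `train_test_split`.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(seed=42)
n = 10_000

# Hypothetical imbalanced dataset: roughly 5% of rows have outcome == 1.
df = pd.DataFrame({
    "feature1": rng.standard_normal(n),
    "outcome": (rng.random(n) < 0.05).astype(int),
})

def positive_rate(frame):
    """Share of rows whose outcome is 1."""
    return frame["outcome"].mean()

# Plain random split: the test set's positive rate drifts by chance.
plain_train, plain_test = train_test_split(df, test_size=0.2, random_state=0)

# Stratified split: the test set's positive rate matches the full data up to rounding.
strat_train, strat_test = train_test_split(
    df, test_size=0.2, random_state=0, stratify=df["outcome"]
)

print(f"full data:       {positive_rate(df):.4f}")
print(f"plain test:      {positive_rate(plain_test):.4f}")
print(f"stratified test: {positive_rate(strat_test):.4f}")
```

With only a few hundred positives, the plain split's drift can be noticeable. The stratified split keeps the test set's share of positives within one row of the full-data share.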

Frequently Asked Questions (FAQ) on "Exploring Data Partitioning and Sampling Methods for Big Data Analysis Project"

1. What is the importance of data partitioning in big data analysis projects?

Data partitioning plays a crucial role in distributing large datasets across multiple nodes to enable parallel processing, thus improving overall performance and scalability in big data analysis projects.

2. How does data partitioning help in improving efficiency and reducing computational costs?

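When data is partitioned on the keys used for joins and aggregations, most of the work can happen on the node that already holds the relevant records. This reduces data movement, minimizes network traffic, and enhances data locality, thereby optimizing resource utilization and reducing computational costs in big data analysis projects.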

3. What are some common data partitioning techniques used in big data analysis?

Common data partitioning techniques include Hash Partitioning, Range Partitioning, Round-Robin Partitioning, and Key Partitioning, each offering different approaches to distributing data for analysis.

4. How do sampling methods support big data analysis projects?

Sampling methods allow analysts to extract a representative subset of data from large datasets for analysis. This speeds up processing, reduces computational requirements, and yields estimates about the entire dataset, with a quantifiable margin of error when the sample is drawn randomly.

5. What are the different types of sampling methods used in big data analysis?

Stratified Sampling, Random Sampling, Systematic Sampling, Cluster Sampling, and Convenience Sampling are some of the commonly used sampling methods in big data analysis, each with its unique advantages and use cases. Convenience sampling, which simply takes whatever data is easiest to reach, is the most prone to bias.

6. How can understanding data partitioning and sampling methods benefit IT projects involving big data analysis?

By understanding and leveraging data partitioning and sampling methods effectively, IT projects can enhance data processing efficiency, improve analytical accuracy, and optimize resource utilization for more robust and insightful big data analysis results.