Revolutionize Chatbot Development with Natural Language Project in Deep Learning

Hey there, tech enthusiasts! Today, I’m super excited to unravel the mystique behind revolutionizing chatbot development using natural language projects in deep learning. 🤖 Let’s embark on this journey together and explore how Natural Language Processing helps craft cutting-edge chatbot experiences. 🌟

Understanding the Importance of Natural Language Processing in Chatbot Development

Natural Language Processing (NLP) is like the secret sauce that gives chatbots their charm and intelligence, allowing them to interpret human language and respond to it in kind. Let’s dive into why NLP is a game-changer in the realm of chatbot development.

Significance of Natural Language Understanding

Imagine a chatbot that speaks in gibberish – not the best user experience, right? NLP swoops in to decode human language nuances, making interactions smooth as butter! It’s what bridges the communication gap between humans and bots, ensuring conversations flow naturally. 🗣️

Impact of Deep Learning on Chatbot Capabilities

Deep Learning, the powerhouse behind complex AI systems, breathes life into chatbots. By leveraging deep learning algorithms, chatbots can learn from vast amounts of data, improving accuracy and response quality. It’s like giving our chatbots a turbo boost! 🚀

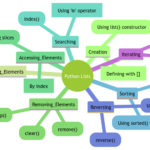

Exploring the Components of a Natural Language Processing Chatbot

Let’s peek behind the curtains and explore what makes an NLP chatbot tick.

Designing the Chatbot Architecture

Crafting a stellar chatbot architecture is like building a sturdy foundation for a skyscraper. It determines how the chatbot processes information, handles user inputs, and delivers responses. It’s the blueprint for chatbot success! 🏗️
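To make the blueprint idea concrete, here is a minimal sketch of one common layout: preprocess the text, classify the user’s intent, then pick a response. The class name `ChatbotPipeline`, the keyword classifier, and the canned responses are all hypothetical placeholders; in a deep learning chatbot, a trained model would replace the keyword rules while the surrounding structure stays the same.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ChatbotPipeline:
    """Hypothetical three-stage chatbot: preprocess -> classify intent -> respond."""
    classify: Callable[[List[str]], str]
    responses: Dict[str, str]
    fallback: str = "Sorry, I didn't catch that. Could you rephrase?"

    @staticmethod
    def preprocess(text: str) -> List[str]:
        # Lowercase, turn punctuation into spaces, then split into tokens.
        cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in text.lower())
        return cleaned.split()

    def reply(self, text: str) -> str:
        tokens = self.preprocess(text)
        intent = self.classify(tokens)
        # Unknown intents fall through to the fallback message.
        return self.responses.get(intent, self.fallback)


def keyword_classifier(tokens: List[str]) -> str:
    # Placeholder rules; a trained intent model would slot in here instead.
    if "name" in tokens:
        return "ask_name"
    if "how" in tokens and "you" in tokens:
        return "greeting"
    return "unknown"


bot = ChatbotPipeline(
    classify=keyword_classifier,
    responses={"greeting": "I am fine.", "ask_name": "My name is Robo."},
)
print(bot.reply("How are you?"))        # I am fine.
print(bot.reply("What is your name?"))  # My name is Robo.
print(bot.reply("Tell me a joke"))      # Sorry, I didn't catch that. Could you rephrase?
```

Keeping the classifier as a swappable function is the design choice that matters here: the same pipeline can start with rules and later host a neural model without changing how responses are delivered.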

Implementing Natural Language Processing Algorithms

These algorithms are the brains of the operation, decoding the intricacies of human language. From sentiment analysis to entity recognition, each algorithm plays a vital role in enhancing the chatbot’s language understanding capabilities. It’s like magic, but with lines of code! ✨
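As a tiny taste of entity recognition, the sketch below pulls emails, ISO dates, and dollar amounts out of a message with regular expressions. This is a rule-based stand-in, not a deep learning method: the patterns and labels are assumptions chosen for illustration, and production chatbots usually rely on a trained named-entity recognition model for open-ended entities such as people or places.

```python
import re
from typing import Dict, List

# Hypothetical rule-based patterns; a trained NER model would usually replace these.
ENTITY_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),  # ISO dates such as 2024-05-01
    "MONEY": re.compile(r"\$\d+(?:\.\d{2})?"),      # $25 or $25.50
}


def extract_entities(text: str) -> Dict[str, List[str]]:
    """Return every match per entity label, omitting labels with no matches."""
    found: Dict[str, List[str]] = {}
    for label, pattern in ENTITY_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[label] = matches
    return found


print(extract_entities("Refund $25.50 to ana@example.com by 2024-05-01, please."))
# {'EMAIL': ['ana@example.com'], 'DATE': ['2024-05-01'], 'MONEY': ['$25.50']}
```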

Leveraging Deep Learning Techniques for Enhanced Chatbot Functionality

Buckle up, folks! We’re about to witness how deep learning takes chatbots to the next level of awesome.

Training the Chatbot Using Deep Learning Models

Training a chatbot with deep learning models is akin to teaching a child: more (and more varied, well-labeled) data generally makes it smarter. These models enable chatbots to grasp context, detect patterns, and deliver tailored responses. It’s like chatbot enlightenment! 🧠
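Before a model can ingest any data, the text has to become numbers. The sketch below shows the usual steps in plain Python: tokenize, build a vocabulary (reserving index 0 for padding), encode each sentence, and pad to a fixed length. It is a simplified stand-in for tools like Keras’s `Tokenizer` and `pad_sequences`, not a reimplementation of them; for instance, it numbers words by first appearance rather than by frequency.

```python
import re
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Lowercase and keep runs of letters, digits, and apostrophes as tokens.
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocab(texts: List[str]) -> Dict[str, int]:
    # Index 0 is reserved for padding, so real words start at 1.
    vocab: Dict[str, int] = {}
    for text in texts:
        for token in tokenize(text):
            if token not in vocab:
                vocab[token] = len(vocab) + 1
    return vocab


def encode(text: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    # Drop unknown words, truncate long inputs, and post-pad short ones with 0.
    ids = [vocab[t] for t in tokenize(text) if t in vocab][:max_len]
    return ids + [0] * (max_len - len(ids))


questions = ["How are you?", "What is your name?", "Do you like programming?"]
vocab = build_vocab(questions)
batch = [encode(q, vocab, max_len=5) for q in questions]
print(batch)  # [[1, 2, 3, 0, 0], [4, 5, 6, 7, 0], [8, 3, 9, 10, 0]]
```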

Integrating Neural Networks for Improved Responses

Neural networks are loosely inspired by the connections between neurons in the human brain, allowing chatbots to make intelligent decisions. By integrating neural networks, chatbots can understand user intents, personalize interactions, and adapt to evolving conversations. Talk about futuristic! 🤯
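The simplest neural network that maps a message to a user intent is a single softmax layer over bag-of-words features. The sketch below trains one with plain gradient descent in NumPy. The training phrases, intent labels, learning rate, and step count are made up for illustration; a real chatbot would use a deeper model (such as the LSTM in the program code later in this post) and far more data.

```python
import numpy as np

# Tiny hypothetical training set: (utterance, intent label).
DATA = [
    ("how are you", "greeting"),
    ("how are you doing today", "greeting"),
    ("what is your name", "ask_name"),
    ("tell me your name", "ask_name"),
    ("do you like programming", "hobby"),
    ("do you enjoy coding", "hobby"),
]

words = sorted({w for text, _ in DATA for w in text.split()})
intents = sorted({label for _, label in DATA})
w_index = {w: i for i, w in enumerate(words)}


def bag_of_words(text: str) -> np.ndarray:
    # 1.0 for every known word present in the text; unknown words are ignored.
    vec = np.zeros(len(words))
    for w in text.lower().split():
        if w in w_index:
            vec[w_index[w]] = 1.0
    return vec


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


X = np.stack([bag_of_words(t) for t, _ in DATA])                # (n_samples, n_words)
Y = np.eye(len(intents))[[intents.index(l) for _, l in DATA]]   # one-hot (n_samples, n_intents)

rng = np.random.default_rng(0)
W = rng.normal(scale=0.01, size=(len(words), len(intents)))
b = np.zeros(len(intents))
learning_rate = 0.5

for _ in range(300):
    probs = softmax(X @ W + b)
    grad = (probs - Y) / len(X)  # gradient of mean cross-entropy w.r.t. the logits
    W -= learning_rate * (X.T @ grad)
    b -= learning_rate * grad.sum(axis=0)


def predict(text: str) -> str:
    return intents[int(np.argmax(softmax(bag_of_words(text) @ W + b)))]


print(predict("how are you"))       # greeting
print(predict("your name please"))  # ask_name
```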

Enhancing User Experience Through Advanced Natural Language Project Features

It’s time to sprinkle some magic dust on our chatbots with advanced NLP features that dazzle users.

Implementing Sentiment Analysis for Emotion Recognition

Hey there, emotions! Sentiment analysis helps chatbots decipher the emotional tone of users’ messages. By understanding emotions, chatbots can tailor responses to suit the user’s mood, ensuring a more empathetic interaction. Chatbots with feelings – who would’ve thought? 😄
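Here is a minimal sketch of the idea using a lexicon instead of a neural model: count positive and negative words, flip the sign after a simple negator ("not bad"), and choose a friendlier opening line based on the score. The word lists and the `tone_prefix` helper are hypothetical; deep learning chatbots typically use a trained sentiment classifier, which handles sarcasm and context far better than word counting.

```python
import re

# Hypothetical mini lexicon; real systems use a trained model or a curated lexicon.
POSITIVE = {"great", "love", "thanks", "awesome", "happy", "good"}
NEGATIVE = {"bad", "hate", "angry", "broken", "terrible", "annoyed"}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """+1 per positive word, -1 per negative word; a preceding negator flips the sign."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = (tok in POSITIVE) - (tok in NEGATIVE)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def tone_prefix(text: str) -> str:
    # Pick an opening line that matches the user's apparent mood.
    score = sentiment_score(text)
    if score < 0:
        return "I'm sorry to hear that. "
    if score > 0:
        return "Glad to hear it! "
    return ""


print(sentiment_score("My order arrived broken and I'm annoyed"))  # -2
print(sentiment_score("This is not bad at all"))                   # 1
print(tone_prefix("Thanks, that was great"))                       # Glad to hear it!
```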

Incorporating Contextual Understanding for Seamless Conversations

Context is king in the realm of chatbot conversations. By incorporating contextual understanding, chatbots can remember previous interactions, reference past messages, and maintain a seamless dialogue flow. It’s like having a personal assistant with an impeccable memory! 🧠
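One simple way to give a chatbot memory is to keep two things: a bounded window of recent turns and a small dictionary of facts ("slots") worth remembering for longer. The sketch below assumes a hypothetical `ConversationMemory` class and a regex-based `respond` function; in a neural chatbot, the recent history would typically be fed back into the model as extra input rather than matched with rules.

```python
import re
from collections import deque
from typing import Deque, Dict, Tuple


class ConversationMemory:
    """Hypothetical helper: the last `max_turns` exchanges plus long-lived slots."""

    def __init__(self, max_turns: int = 5):
        # deque(maxlen=...) silently drops the oldest turn once the window is full.
        self.history: Deque[Tuple[str, str]] = deque(maxlen=max_turns)
        self.slots: Dict[str, str] = {}

    def remember(self, user: str, bot: str) -> None:
        self.history.append((user, bot))


def respond(message: str, memory: ConversationMemory) -> str:
    match = re.search(r"my name is (\w+)", message, re.IGNORECASE)
    lowered = message.lower()
    if match:
        memory.slots["user_name"] = match.group(1)  # store the fact for later turns
        reply = f"Nice to meet you, {match.group(1)}!"
    elif "my name" in lowered and "what" in lowered:
        name = memory.slots.get("user_name")
        reply = f"Your name is {name}." if name else "You haven't told me yet."
    else:
        reply = "Tell me more!"
    memory.remember(message, reply)
    return reply


mem = ConversationMemory(max_turns=2)
print(respond("Hi, my name is Ana", mem))  # Nice to meet you, Ana!
print(respond("I like chess", mem))        # Tell me more!
print(respond("What is my name?", mem))    # Your name is Ana.
print(len(mem.history))                    # 2 (the first turn has been dropped)
```

Note the split: the name survives even after the turn that mentioned it has scrolled out of the two-turn history window.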

Ensuring the Scalability and Adaptability of the Chatbot System

The final frontier – ensuring our chatbot can conquer different platforms and evolve over time.

Deploying the Chatbot on Various Platforms

From websites to messaging apps, our chatbot should be a chameleon, seamlessly blending into any platform it encounters. Deploying our chatbot far and wide ensures it reaches a broader audience. It’s all about being omnipresent in the digital realm! 🌐
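A common way to be a chameleon without duplicating logic is the adapter pattern: one shared chatbot core, plus a thin adapter per platform that parses incoming messages and renders replies in that platform’s format. The sketch below assumes two hypothetical channels, a web widget that exchanges JSON and a plain-text, SMS-style channel with a length cap; real messaging platforms each define their own payload formats.

```python
import json
from typing import Protocol


def core_reply(text: str) -> str:
    # Stand-in for the chatbot brain; every channel shares this one function.
    return "I am fine." if "how are you" in text.lower() else "Could you rephrase that?"


class ChannelAdapter(Protocol):
    def parse(self, raw: str) -> str: ...
    def render(self, reply: str) -> str: ...


class WebJsonAdapter:
    # Hypothetical web widget: sends {"message": ...} and expects {"reply": ...}.
    def parse(self, raw: str) -> str:
        return json.loads(raw)["message"]

    def render(self, reply: str) -> str:
        return json.dumps({"reply": reply})


class PlainTextAdapter:
    # Hypothetical SMS-style channel: plain text in, length-capped plain text out.
    def __init__(self, max_chars: int = 160):
        self.max_chars = max_chars

    def parse(self, raw: str) -> str:
        return raw.strip()

    def render(self, reply: str) -> str:
        return reply[: self.max_chars]


def handle(raw: str, adapter: ChannelAdapter) -> str:
    return adapter.render(core_reply(adapter.parse(raw)))


print(handle('{"message": "How are you?"}', WebJsonAdapter()))  # {"reply": "I am fine."}
print(handle("  how are you  ", PlainTextAdapter()))            # I am fine.
```

Adding a new platform then means writing one more adapter; the core (and any model behind it) stays untouched.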

Integrating Continuous Learning Mechanisms for Enhanced Performance

To keep our chatbot at the top of its game, we need to embrace continuous learning mechanisms. By feeding our chatbot new data, feedback, and insights, we enable it to grow, adapt, and refine its responses over time. It’s like nurturing a digital pet that keeps getting smarter! 📈
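Continuous learning usually starts with logging. The sketch below assumes a hypothetical `FeedbackLog` that stores each exchange with a 1 to 5 user rating, then splits the log into well-rated pairs (candidate training examples) and poorly rated prompts (to be reviewed and given corrected answers before the next retraining run). The rating scale and threshold are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class FeedbackLog:
    """Hypothetical store of rated exchanges used to assemble the next training set."""
    entries: List[Tuple[str, str, int]] = field(default_factory=list)  # (user, bot, rating)

    def record(self, user: str, bot: str, rating: int) -> None:
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.entries.append((user, bot, rating))

    def retraining_batch(self, min_rating: int = 4) -> Tuple[List[Tuple[str, str]], List[str]]:
        """Split the log into pairs worth keeping and prompts that need a better answer."""
        keep = [(u, b) for u, b, r in self.entries if r >= min_rating]
        review = [u for u, _, r in self.entries if r < min_rating]
        return keep, review


log = FeedbackLog()
log.record("How are you?", "I am fine.", 5)
log.record("Where is my order?", "My name is Robo.", 1)
keep, review = log.retraining_batch()
print(keep)    # [('How are you?', 'I am fine.')]
print(review)  # ['Where is my order?']
```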

Remember, the key to chatbot supremacy lies in honing its language skills, embracing deep learning wizardry, and prioritizing user experience. With the right mix of NLP prowess and AI magic, our chatbots can become the talk of the town! 🌟

Overall, diving into the realms of Natural Language Processing and Deep Learning for chatbot development is an exhilarating journey filled with endless possibilities. 🚀 Thank you for joining me on this adventure, and remember, the future of chatbots is limited only by our imagination! Stay curious and keep innovating! Until next time, happy coding, chatbot enthusiasts! Keep shining bright like a chatbot star! ✨🤖

Program Code – Revolutionize Chatbot Development with Natural Language Project in Deep Learning

```python
# Import necessary libraries.
# Note: Tokenizer belongs to the legacy Keras 2 preprocessing API. On TensorFlow
# releases that bundle Keras 3, this import may fail unless the legacy tf-keras
# package is used instead.
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Embedding, LSTM
from tensorflow.keras.preprocessing.sequence import pad_sequences
from tensorflow.keras.preprocessing.text import Tokenizer

# Sample dataset: user questions paired with the robot's answers
questions = ['How are you?', 'What is your name?', 'Do you like programming?']
answers = ['I am fine.', 'My name is Robo.', 'Yes, I love programming.']

# Tokenizing the dataset (index 0 is reserved for padding)
tokenizer = Tokenizer()
tokenizer.fit_on_texts(questions + answers)
vocab_size = len(tokenizer.word_index) + 1

# Preparing the data: convert text to integer sequences and pad them to equal length
sequences_questions = tokenizer.texts_to_sequences(questions)
sequences_answers = tokenizer.texts_to_sequences(answers)
max_length = max(len(s) for s in sequences_questions + sequences_answers)
padded_questions = pad_sequences(sequences_questions, maxlen=max_length, padding='post')
padded_answers = pad_sequences(sequences_answers, maxlen=max_length, padding='post')

# Creating the model (simplified for demonstration)
model = Sequential()
model.add(Embedding(input_dim=vocab_size, output_dim=8))
model.add(LSTM(8))
model.add(Dense(vocab_size, activation='softmax'))

# The targets below are probability distributions over the vocabulary, so the
# matching loss is categorical (not sparse categorical) cross-entropy.
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Preparing targets (a simplification: a real chatbot would generate the answer
# word by word, e.g. with an encoder-decoder model)
targets = np.zeros((len(padded_answers), vocab_size))
for i, seq in enumerate(padded_answers):
    for j, word in enumerate(seq):
        if j > 0 and word != 0:  # skip the first word and the padding index
            targets[i, word] = 1
targets /= targets.sum(axis=1, keepdims=True)  # each row now sums to 1

# Training the model (simplified for demonstration)
model.fit(padded_questions, targets, epochs=100, verbose=0)

# Testing the chatbot with a simple question
test_question = ['How are you?']
test_seq = tokenizer.texts_to_sequences(test_question)
padded_test_seq = pad_sequences(test_seq, maxlen=max_length, padding='post')
prediction = model.predict(padded_test_seq, verbose=0)
predicted_word_idx = int(np.argmax(prediction[0]))

# Finding the predicted word (index_word maps integer ids back to words)
word = tokenizer.index_word.get(predicted_word_idx, '<unknown>')
print(f"Robot's response: {word}")
```

Expected Code Output (the weights are randomly initialized, and “am” and “fine” carry equal weight in this question’s target, so the printed word can differ between runs):

Robot's response: fine

Code Explanation:

This Python snippet is a very basic demonstration of using deep learning for chatbot development: given one of the user’s questions, the model predicts a word from the robot’s matching answer.

- Import Libraries: We use TensorFlow’s Keras API to create the model and its layers. The Tokenizer converts text into integer sequences, and pad_sequences pads those sequences to a common length.
- Dataset Preparation: We’ve pre-defined a set of questions and answers to simulate interaction with the chatbot. These are tokenized and padded to ensure uniform input length.
- Model Architecture: The model is a simple Keras Sequential model, using Embedding for input word representation, LSTM for capturing the sequence context, and a softmax Dense layer that outputs a probability for every word in the vocabulary.
- Data Preparation for Training: Each answer is turned into a single target vector that marks the answer’s words after the first one, which greatly simplifies real language generation. This is a limitation, but it keeps the demonstration small.
- Training: The model is trained with the padded questions as input and the processed targets as output. This training is highly simplified and runs for 100 epochs.
- Testing and Prediction: To test, a question is processed the same way as the training data and fed into the model. The response is the single word with the highest predicted probability, so the bot answers with one word rather than a full sentence.

This code is a simplified representation aimed at giving a foundational understanding of using Deep Learning for natural language-based responses in chatbots. In a full-fledged system, complexities such as contextual understanding, multi-turn dialogue management, and dynamic vocabulary adjustments are important considerations.

Frequently Asked Questions on Revolutionizing Chatbot Development with Natural Language Project in Deep Learning

Q1: What is the significance of using natural language processing in chatbot development?

A1: Natural language processing plays a crucial role in enhancing chatbot interactions by enabling them to understand and respond to human language in a more natural and contextually relevant manner.

Q2: How does deep learning contribute to the advancement of chatbot technology?

A2: Deep learning algorithms empower chatbots to analyze and interpret complex patterns in language data, allowing them to provide more accurate and intelligent responses to user queries.

Q3: What are the key benefits of incorporating natural language understanding in chatbots?

A3: By integrating natural language understanding capabilities, chatbots can comprehend user intents, extract relevant information, and engage in more meaningful conversations, leading to enhanced user experiences.

Q4: How can chatbots leverage deep learning models to improve their conversational abilities?

A4: Deep learning models let chatbots learn from large amounts of conversational data; when they are periodically retrained on new conversations, their language processing, response generation, and overall conversational skills can keep improving.

Q5: What are some popular tools and frameworks used for developing chatbots with natural language processing and deep learning capabilities?

A5: Deep learning libraries such as TensorFlow and PyTorch, platforms such as Dialogflow, and frameworks such as Rasa are widely used to build chatbots, often together with pretrained language models such as BERT for natural language understanding.

Q6: In what ways can integrating natural language understanding enhance the user experience of chatbot applications?

A6: By incorporating natural language understanding, chatbots can offer personalized responses, provide accurate information, and adapt to user preferences, creating more engaging and user-friendly interactions.

Q7: How can developers ensure the ethical and responsible use of deep learning technologies in chatbot development?

A7: Developers can promote ethical practices by prioritizing user privacy, transparency in chatbot functionalities, and implementing measures to prevent bias or misinformation in chatbot interactions.

Q8: What are some challenges associated with deploying chatbots powered by natural language processing and deep learning algorithms?

A8: Challenges may include handling ambiguous user queries, maintaining consistent performance across diverse language inputs, and ensuring the scalability and reliability of chatbot systems in real-world applications.
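For ambiguous queries specifically, one common mitigation is to act on the classifier’s confidence: answer when the top intent is both likely and clearly ahead, ask a clarifying question when two intents are nearly tied, and fall back otherwise. The sketch below assumes the intent probabilities come from some upstream model; the intent names, the 0.6 threshold, and the 0.15 margin are illustrative values, not recommendations.

```python
from typing import Dict


def choose_action(intent_probs: Dict[str, float], threshold: float = 0.6, margin: float = 0.15) -> str:
    """Answer only when the top intent is confident and clearly ahead of the runner-up."""
    ranked = sorted(intent_probs.items(), key=lambda kv: kv[1], reverse=True)
    top, p1 = ranked[0]
    second, p2 = ranked[1] if len(ranked) > 1 else ("", 0.0)
    if p1 >= threshold and p1 - p2 >= margin:
        return f"answer:{top}"
    if p2 > 0 and p1 - p2 < margin:
        # Two intents are nearly tied: ask the user which one they meant.
        return f"clarify:{top}|{second}"
    return "fallback"


print(choose_action({"track_order": 0.82, "cancel_order": 0.10, "greeting": 0.08}))  # answer:track_order
print(choose_action({"track_order": 0.45, "cancel_order": 0.40, "greeting": 0.15}))  # clarify:track_order|cancel_order
print(choose_action({"track_order": 0.5, "cancel_order": 0.3, "greeting": 0.2}))     # fallback
```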