An Overview of the Mapper Process

When delving into the world of data processing, one can’t help but encounter the fascinating concept of the Mapper Process. 🗺️ But what exactly is this Mapper Process, and what purpose does it serve in the realm of data processing? Let me take you on a whimsical journey through the realm of MapReduce and its intricate Mapper Process!

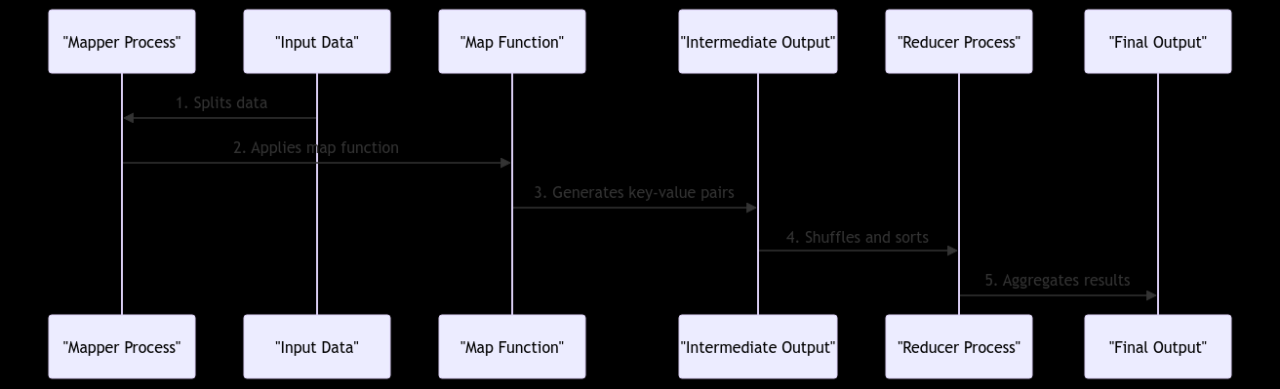

Working of the Mapper Process

Ah, the Mapper Process – a crucial cog in the grand machinery of data processing! Imagine a bustling kitchen where ingredients are prepped before they magically transform into a delectable dish; that’s precisely what the Mapper Process does in data processing. 🍳 Let’s peek into its inner workings, shall we?

Input to the Mapper Process

Picture this: a deluge of raw data pouring in like a torrential downpour. 🌧️ The Mapper Process acts as the gatekeeper, skillfully taking this unrefined data as input and breaking it down into key-value pairs. It’s like turning chaos into organized chaos – the first step towards processing data effectively!
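To make that a little more concrete, here is a minimal sketch of raw text arriving at a mapper as records. It assumes line-oriented text input where each record is a (byte offset, line) pair, which is how text input is commonly presented; the helper names `to_records` and `map_record` are made up for illustration.

```python
from typing import Iterable, Iterator, Tuple


def to_records(lines: Iterable[str]) -> Iterator[Tuple[int, str]]:
    """Yield (byte_offset, line) records from raw lines of text."""
    offset = 0
    for line in lines:
        yield offset, line.rstrip("\n")
        # Advance by the encoded length so offsets count bytes, not characters
        offset += len(line.encode("utf-8"))


def map_record(offset: int, line: str) -> Iterator[Tuple[str, int]]:
    """Turn one input record into (word, 1) pairs; the offset is ignored here."""
    for word in line.split():
        yield word, 1


raw = ["to be or\n", "not to be\n"]
pairs = [kv for offset, line in to_records(raw) for kv in map_record(offset, line)]
print(pairs)
```

The input key (the offset) is often thrown away, as it is here; what matters is the key-value pairs the mapper chooses to emit.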

Output of the Mapper Process

After the Mapper Process works its enchanting magic on the input data, what emerges is a structured set of key-value pairs, ready to be passed on to the next stage of data processing. 🪄 It’s akin to turning a messy room into a meticulously arranged library – neat, orderly, and primed for further exploration!
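What happens to those pairs next? Roughly speaking, the framework sorts them by key and groups the values before handing them to the reducer. The sketch below imitates that step in plain Python on a single machine; the function name `shuffle` is an illustrative choice, not a framework API.

```python
from itertools import groupby
from operator import itemgetter
from typing import Iterable, Iterator, List, Tuple


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Iterator[Tuple[str, List[int]]]:
    """Sort mapper output by key and collect the values that share a key."""
    ordered = sorted(pairs, key=itemgetter(0))
    for key, group in groupby(ordered, key=itemgetter(0)):
        yield key, [value for _, value in group]


mapper_output = [("hello", 1), ("world", 1), ("hello", 1)]
grouped = list(shuffle(mapper_output))
print(grouped)  # [('hello', [1, 1]), ('world', [1])]
```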

Significance of Mapper Process in Data Processing

Now, you might wonder, why all the hype around the Mapper Process? 🤔 Well, let me unravel its significance in the tapestry of data processing, especially within the wondrous world of MapReduce!

Efficiency in Data Processing

Efficiency, my dear Watson, is the name of the game when it comes to the Mapper Process. Because every record is turned into key-value pairs independently of every other record, the work can be divided among many mappers running at the same time, and no mapper has to wait on another. It’s like having a master chef prep all your ingredients, so you can whip up a feast in no time! 🍲

Scalability of MapReduce with Mapper Process

Ah, scalability, the holy grail of modern data processing! Mappers share no state with one another, so as a dataset grows the framework can cut it into more input splits and run more mappers in parallel across more machines. Think of it as a magician pulling an endless string of colorful scarves out of a hat: no matter how large the dataset, there is always another mapper ready for the next split!
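Here is a small sketch of that idea on one machine. It assumes the input has already been cut into splits and uses a thread pool as a stand-in for a cluster; a real framework would run each mapper as a separate task on separate nodes. The names `map_split` and `run_mappers` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def map_split(split: List[str]) -> List[Tuple[str, int]]:
    """Map one input split (a list of lines) to (word, 1) pairs."""
    return [(word, 1) for line in split for word in line.split()]


def run_mappers(splits: List[List[str]], max_workers: int = 4) -> List[Tuple[str, int]]:
    """Run one mapper per split concurrently and concatenate their outputs."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the input splits
        results = pool.map(map_split, splits)
        return [pair for result in results for pair in result]


splits = [["a b"], ["b c"], ["c a"]]
print(run_mappers(splits))
```

Since no mapper depends on another, handling more data is mostly a matter of adding splits and workers.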

Challenges Faced in Implementing Mapper Process

But wait, it’s not all sunshine and rainbows in the land of data processing! Implementing the Mapper Process comes with its own set of challenges, like navigating through treacherous waters. 🌊 Let’s explore these hurdles together!

Handling Big Data Sets

Ah, the ominous shadow of Big Data looms large over the Mapper Process. Handling colossal datasets requires finesse and ingenuity, ensuring that the Mapper Process doesn’t buckle under the weight of sheer magnitude. It’s like trying to juggle a dozen flaming torches – one misstep, and it all goes up in flames!
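One way to keep those torches in the air is to never hold the whole dataset at once. The sketch below lazily cuts a stream of lines into fixed-size splits, so only one split sits in memory at a time. Splitting by line count is an assumption made for simplicity (real frameworks typically split by bytes or storage blocks), and `make_splits` is an illustrative name.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def make_splits(lines: Iterable[str], lines_per_split: int) -> Iterator[List[str]]:
    """Lazily group a stream of lines into splits of at most lines_per_split lines."""
    iterator = iter(lines)
    while True:
        split = list(islice(iterator, lines_per_split))
        if not split:
            return  # the stream is exhausted
        yield split


for split in make_splits(["a", "b", "c", "d", "e"], 2):
    print(split)
```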

Ensuring Data Integrity

In the turbulent seas of data processing, maintaining data integrity is paramount. In practice, that means the Mapper Process should validate each record as it arrives, skip or flag malformed input rather than crash on it, and never silently alter the values it passes along. It’s like being the guardian of a precious treasure, warding off any lurking thieves who seek to tamper with it! 💰
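As a rough illustration, a mapper can check each record before emitting anything and keep a tally of what it rejected. The `name,amount` record format and the counter names below are assumptions invented for this sketch, not part of any particular dataset or framework.

```python
from typing import Dict, Iterable, List, Tuple


def map_with_validation(
    lines: Iterable[str],
) -> Tuple[List[Tuple[str, float]], Dict[str, int]]:
    """Emit (name, amount) pairs from 'name,amount' lines, counting bad records."""
    pairs: List[Tuple[str, float]] = []
    counters = {"ok": 0, "malformed": 0}
    for line in lines:
        fields = line.strip().split(",")
        try:
            if len(fields) != 2:
                raise ValueError("expected exactly two fields")
            key, amount = fields[0], float(fields[1])
        except ValueError:
            # Skip the bad record but remember that it happened
            counters["malformed"] += 1
            continue
        pairs.append((key, amount))
        counters["ok"] += 1
    return pairs, counters


pairs, counters = map_with_validation(["alice,3.5", "broken", "bob,x", "bob,2"])
print(pairs, counters)
```

Reporting the counters alongside the output makes it obvious when a job quietly dropped more records than expected.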

Best Practices for Optimizing the Mapper Process

Amidst the challenges and triumphs of the Mapper Process, there exist shining beacons of best practices, guiding the way to optimization and efficiency. 🌟 Let’s uncover these gems together!

Data Partitioning Techniques

Ah, the art of dividing and conquering! Data partitioning techniques play a vital role in optimizing the Mapper Process, ensuring that data is distributed evenly and processed efficiently. It’s like slicing a massive cake into equal portions, making sure everyone gets a taste of the delicious data goodness! 🍰
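To sketch how the cake might be sliced: a common approach is to hash each key and take the result modulo the number of partitions, so that every pair with the same key lands in the same place. The version below uses `zlib.crc32` because Python's built-in `hash()` for strings varies between runs; the function names are illustrative and this is not a copy of any framework's partitioner.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def partition_for(key: str, num_partitions: int) -> int:
    """Pick a partition for a key using a hash that is stable across runs."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_pairs(
    pairs: Iterable[Tuple[str, int]], num_partitions: int
) -> Dict[int, List[Tuple[str, int]]]:
    """Distribute mapper output into buckets, one bucket per partition."""
    buckets: Dict[int, List[Tuple[str, int]]] = defaultdict(list)
    for key, value in pairs:
        buckets[partition_for(key, num_partitions)].append((key, value))
    return dict(buckets)


buckets = partition_pairs([("hello", 1), ("world", 1), ("hello", 1)], 4)
print(buckets)
```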

Reducing Data Skewness

Ah, data skewness, the bane of every data processor’s existence! Skew means a handful of keys or input splits carry far more data than the rest, so a few tasks are still grinding away long after the others have finished. Reducing it prevents those bottlenecks and delays. It’s like untangling a chaotic ball of yarn, smoothing out the kinks and loops to unravel a seamless processing experience!
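One technique sometimes used against skewed keys is "salting": the mapper appends a small random suffix to known hot keys so their records spread over several partitions, and a later pass strips the suffix and merges the partial results. The sketch below assumes the hot keys are known in advance and that `#` never appears in a real key; both are assumptions for illustration, as are the function names.

```python
import random
from typing import Set


def salt_key(key: str, hot_keys: Set[str], num_salts: int, rng: random.Random) -> str:
    """Append a random salt to hot keys so they spread across partitions."""
    if key in hot_keys:
        return f"{key}#{rng.randrange(num_salts)}"
    return key


def unsalt_key(key: str) -> str:
    """Recover the original key (assumes '#' never occurs in a real key)."""
    return key.split("#", 1)[0]


rng = random.Random(0)  # seeded only to make the demo repeatable
salted = [salt_key(word, {"the"}, 4, rng) for word in ["the", "the", "cat", "the"]]
print(salted)
```

The trade-off is an extra aggregation step afterwards, since each hot key's total is now split across several salted keys.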

In Closing

Overall, the Mapper Process stands as a stalwart guardian in the realm of data processing, navigating through challenges and triumphs with equal parts wit and wisdom. 💪 Thank you for joining me on this whimsical journey through the intricacies of MapReduce and its enigmatic Mapper Process. Remember, when in doubt, just keep on mapping and reducing – the data world awaits your adventurous spirit! 🚀

Program Code – The Mapper Process in Data Processing: Exploring MapReduce

```python
import sys
from typing import Iterator, List, TextIO, Tuple


def read_input(file: TextIO) -> Iterator[str]:
    '''
    Reads input from a file/stdin and yields it one line at a time.
    '''
    for line in file:
        # Remove leading and trailing whitespace
        yield line.strip()


def map_words(line: str) -> List[Tuple[str, int]]:
    '''
    Takes a line of text and returns a list of (word, 1) tuples.
    '''
    # Split the line into words
    words = line.split()
    # Return a list of tuples (word, 1)
    return [(word, 1) for word in words]


def main(argv):
    '''
    The main mapper function. Reads from stdin and outputs (word, 1) pairs.
    '''
    # Read input from stdin/file
    lines = read_input(sys.stdin)
    # Process each line
    for line in lines:
        # Map words in the line
        word_tuples = map_words(line)
        # Print out each word tuple
        for word, count in word_tuples:
            print(f'{word} {count}')


if __name__ == '__main__':
    main(sys.argv)
```

Code Output:

The output will depend on the input provided to the program. For instance, if the input text is ‘Hello world Hello’, the output will be:

```
Hello 1
world 1
Hello 1
```

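This output signifies that each word in the input is mapped to a value of 1, indicating a single occurrence of the word. Notice that Hello appears twice: the mapper does not add the counts up, because summing the values for each key is the reducer’s job.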

Code Explanation:

The provided code demonstrates a simple mapper process in the context of MapReduce, a powerful data processing paradigm. Here’s a step-by-step explanation of how the program achieves its objectives:

- Import Statements: The code begins by importing the necessary modules: `sys` for accessing system-specific parameters and functions, and `typing` for type hints, which make the code more readable.
- Read Input Function: The `read_input` function takes file input (or stdin) and yields each line stripped of leading and trailing whitespace. It’s essential for processing data line by line in a streaming fashion, which is a common scenario in large-scale data processing.
- Map Words Function: The `map_words` function is where the actual ‘mapping’ takes place. It accepts a line of text, splits it into words, and for each word, it creates a tuple (word, 1). This tuple format is crucial for the following Reduce step in MapReduce, where all values of the same key (word) will be aggregated.
- Main Function: This is the entry point of the mapper script. It reads lines from stdin (which could be piped input from a file or another process), processes each line to map words to (word, 1) tuples, and then outputs these tuples to stdout. This output serves as the input for the subsequent Reduce step in the MapReduce process.
- Execution Block: The usual Python idiom to check if the script is run as the main program, triggering the main function with system arguments.

Overall, this code represents the Mapper phase of MapReduce, where data is read, mapped to key-value pairs, and prepared for reduction. The script itself processes only the input it is handed; the distribution comes from the framework, which runs many copies of it in parallel, one per input split. The key focus is on processing data efficiently and preparing it for aggregation or further processing, embodying the core principles of distributed data processing.
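For the curious, here is a hedged sketch of what a matching reducer could look like. It assumes the mapper's space-separated `word count` lines have been sorted before they arrive, which is what the framework (or a plain `sort` in a local pipeline) would do; the names `parse_pairs` and `reduce_counts` are illustrative and not part of the original program.

```python
from itertools import groupby
from typing import Iterable, Iterator, Tuple


def parse_pairs(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Parse 'word count' lines as printed by the mapper."""
    for line in lines:
        word, count = line.rsplit(" ", 1)
        yield word, int(count)


def reduce_counts(sorted_lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Sum the counts for each word; the input must already be sorted by word."""
    for word, group in groupby(parse_pairs(sorted_lines), key=lambda pair: pair[0]):
        yield word, sum(count for _, count in group)


mapper_output = ["Hello 1", "world 1", "Hello 1"]
totals = list(reduce_counts(sorted(mapper_output)))
print(totals)  # [('Hello', 2), ('world', 1)]
```

The sort in the middle is what lets the reducer see all values for a key together without holding the whole dataset in memory.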

FAQs on The Mapper Process in Data Processing: Exploring MapReduce

What is the role of the mapper process in MapReduce?

The mapper process in MapReduce is responsible for taking input data and converting it into key-value pairs. These key-value pairs act as intermediate data that are then passed to the reducer for further processing.

How does the mapper process work in MapReduce?

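In the mapper process, the input data is divided into smaller chunks (input splits), and each chunk is processed by a separate mapper. The mapper performs tasks like filtering and transforming the data before emitting key-value pairs based on the logic defined in the mapper function. Sorting is not the mapper’s job: the framework sorts and groups the emitted pairs by key during the shuffle phase, before they reach the reducers.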

Can the mapper process in MapReduce be customized?

Yes, the mapper process can be customized to suit the specific requirements of the data processing task. Programmers can write custom logic and functions within the mapper to handle complex data transformations and mappings.

What are some common challenges faced when working with the mapper process?

One common challenge faced when working with the mapper process is data skew, where certain mappers have significantly more data to process than others. This can lead to performance bottlenecks and uneven workload distribution.

How can one optimize the performance of the mapper process in MapReduce?

To optimize the performance of the mapper process, techniques such as data partitioning, combiners, and proper tuning of parameters like the number of mappers can be utilized. These strategies help in improving efficiency and reducing processing time.
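To illustrate the combiner idea, the sketch below does the combining inside the mapper itself: it tallies words locally and emits one pair per distinct word instead of one pair per occurrence. Whether this is worth it depends on how many distinct keys fit in memory, and `map_with_combiner` is a name chosen for this example.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def map_with_combiner(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Count words locally so fewer pairs need to leave the mapper."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.split())
    # Sorted only to make the output order predictable
    return sorted(counts.items())


combined = map_with_combiner(["Hello world Hello"])
print(combined)  # [('Hello', 2), ('world', 1)]
```

Compared with emitting `Hello 1` twice, this sends a single `Hello 2`, which reduces the data shuffled to the reducers.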

Is the mapper process the only component in a MapReduce job?

No, the mapper process is just one part of a MapReduce job. In addition to the mapper, there is also the reducer process that aggregates and processes the output of the mappers to produce the final result. Both mappers and reducers work together to perform distributed data processing efficiently.

Are there any best practices to follow when writing a mapper process in MapReduce?

Some best practices when writing a mapper process include keeping the logic simple and efficient, minimizing data transfers, and utilizing in-memory processing where possible. It is also recommended to test the mapper thoroughly to ensure it behaves as expected.
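Testing a mapper can be as simple as calling its mapping function on a few sample lines. Below is a minimal sketch using Python's `unittest`; it redefines a `map_words` function equivalent to the one in the program above so that the example runs on its own, and `run_tests` is a small helper invented for the demo.

```python
import unittest
from typing import List, Tuple


def map_words(line: str) -> List[Tuple[str, int]]:
    """Same behaviour as the mapper's map_words: one (word, 1) pair per word."""
    return [(word, 1) for word in line.split()]


class MapWordsTest(unittest.TestCase):
    def test_repeated_words_are_emitted_separately(self):
        self.assertEqual(
            map_words("Hello world Hello"),
            [("Hello", 1), ("world", 1), ("Hello", 1)],
        )

    def test_blank_line_emits_nothing(self):
        self.assertEqual(map_words("   "), [])


def run_tests() -> unittest.TestResult:
    """Run the test case without exiting the interpreter."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(MapWordsTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_tests()
```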

What programming languages are commonly used for writing mapper processes in MapReduce?

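Popular programming languages used for writing mapper processes in MapReduce include Java, Python, and Scala. Hadoop’s native MapReduce API is written in Java, so Java and other JVM languages such as Scala can use it directly, while Python mappers like the one above typically run through Hadoop Streaming, which passes records to the script on stdin and reads its output from stdout.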

Hope you find these FAQs helpful in understanding the mapper process in data processing using MapReduce! 🚀