Unraveling Conditional Random Fields in Machine Learning

Hey there, all you wonderful readers! Today, I’m diving into the fascinating world of Conditional Random Fields. 🧐 Let’s embark on this exciting journey together as we explore this quirky corner of the machine learning universe! 🚀

Understanding Conditional Random Fields

Hey, so what on earth are these Conditional Random Fields, you might be wondering? Well, let me break it down for you in the simplest way possible!

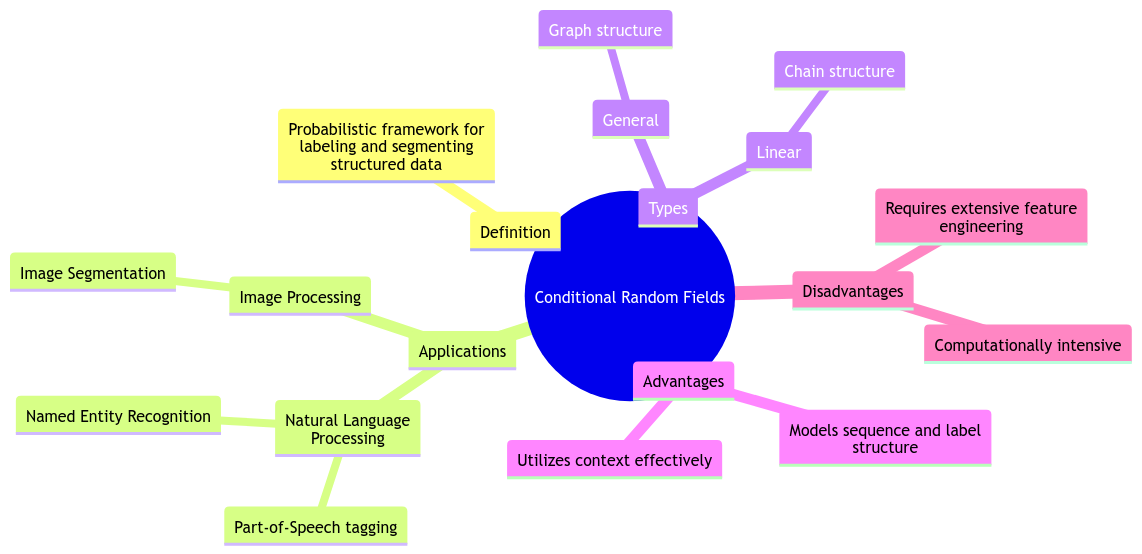

Definition of Conditional Random Field

Alright, imagine you’re trying to predict a whole sequence of labels, like a tag for every word in a sentence, and you know that neighboring labels influence each other. That’s where Conditional Random Fields (CRFs) swoop in to save the day! 🦸‍♀️ In a nutshell, CRFs are a type of discriminative probabilistic model that is commonly used for sequence labeling tasks. They’re all about modeling the conditional probability of a label sequence given the input features, rather than modeling how the inputs themselves are generated.
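To make “conditional probability of a label sequence” concrete, here is a minimal sketch of a tiny linear-chain CRF with hand-picked, hypothetical scores. The score of a label sequence y for an input x is the sum of per-position emission scores plus transition scores between consecutive labels, and P(y | x) = exp(score(y, x)) / Z(x), where Z(x) sums exp(score) over every possible label sequence. The numbers below are made up for illustration; a trained CRF would compute them from weighted feature functions. Enumerating every sequence only works at toy sizes.

```python
import itertools
import math

# Hypothetical label set and scores, chosen only for illustration.
LABELS = ["O", "PER"]

# emission[t][label]: how strongly the input at position t supports each label.
emission = [
    {"O": 0.5, "PER": 2.0},   # e.g. a capitalized word
    {"O": 1.5, "PER": 1.0},
    {"O": 2.0, "PER": 0.1},
]

# transition[(prev, cur)]: how compatible two consecutive labels are.
transition = {
    ("O", "O"): 0.5, ("O", "PER"): 0.0,
    ("PER", "O"): 0.2, ("PER", "PER"): 1.0,
}


def score(labels):
    """Unnormalized log-score of one complete label sequence."""
    total = sum(emission[t][y] for t, y in enumerate(labels))
    total += sum(transition[(a, b)] for a, b in zip(labels, labels[1:]))
    return total


def conditional_probability(labels):
    """P(labels | x) = exp(score) / Z(x), with Z(x) computed by brute force."""
    all_sequences = itertools.product(LABELS, repeat=len(emission))
    z = sum(math.exp(score(seq)) for seq in all_sequences)
    return math.exp(score(labels)) / z


if __name__ == "__main__":
    for seq in itertools.product(LABELS, repeat=len(emission)):
        print(seq, round(conditional_probability(seq), 3))
```

Because every probability shares the same denominator Z(x), the probabilities of all label sequences sum to 1.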

How Conditional Random Fields Differ from Other Models

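Now, you might be thinking, “How are CRFs any different from other models out there?” 🤔 Well, my friend, compared with a classifier that labels each token on its own, a CRF also models the dependencies between neighboring labels, so it can learn that, say, an “inside a person’s name” tag shouldn’t directly follow an “outside any entity” tag. And compared with Hidden Markov Models, which are generative and model the joint probability of inputs and labels, CRFs are discriminative: they model P(labels | inputs) directly, which lets them use rich, overlapping features of the input. That combination makes them super handy for tasks like named entity recognition or part-of-speech tagging. They’re like the cool kids in the machine learning playground, strutting their stuff with their fancy sequential modeling capabilities! 💃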
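One way to see why modeling label dependencies matters is to compare per-position argmax decoding with Viterbi decoding, which finds the single highest-scoring label sequence under emission plus transition scores. The sketch below uses hypothetical NumPy score matrices (not learned weights) with labels O, B-PER (begins a name) and I-PER (continues one), and heavily penalizes the invalid transition O → I-PER.

```python
import numpy as np

LABELS = ["O", "B-PER", "I-PER"]

# Hypothetical scores: rows are positions, columns follow LABELS.
emissions = np.array([
    [2.0, 0.5, 0.0],
    [0.2, 0.8, 1.0],   # on its own, this position slightly prefers I-PER
    [0.3, 0.1, 1.2],
])

# transitions[i, j]: score for moving from LABELS[i] to LABELS[j].
transitions = np.array([
    [0.0, 0.0, -5.0],  # O -> I-PER is (almost) forbidden
    [0.0, -1.0, 1.0],
    [0.0, -1.0, 0.5],
])


def viterbi(emissions, transitions):
    """Return the highest-scoring label index sequence for a linear-chain CRF."""
    n_steps, n_labels = emissions.shape
    best = emissions[0].copy()               # best score of a path ending in each label
    backpointers = np.zeros((n_steps, n_labels), dtype=int)
    for t in range(1, n_steps):
        # candidate[i, j] = best path ending in label i, then moving to label j at step t
        candidate = best[:, None] + transitions + emissions[t][None, :]
        backpointers[t] = candidate.argmax(axis=0)
        best = candidate.max(axis=0)
    # Follow the backpointers from the best final label to recover the path.
    path = [int(best.argmax())]
    for t in range(n_steps - 1, 0, -1):
        path.append(int(backpointers[t, path[-1]]))
    return path[::-1]


greedy = [LABELS[i] for i in emissions.argmax(axis=1)]
joint = [LABELS[i] for i in viterbi(emissions, transitions)]
print("per-position argmax:", greedy)
print("Viterbi:", joint)
```

With these numbers, per-position argmax produces `['O', 'I-PER', 'I-PER']` (an I-PER right after O), while Viterbi returns the consistent `['O', 'B-PER', 'I-PER']` because the transition scores are weighed together with the per-position evidence.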

Applications of Conditional Random Fields

CRFs aren’t just sitting around twiddling their thumbs; oh no, they’re out there making a real impact in various domains. Let’s take a peek at where these bad boys are strutting their stuff!

Natural Language Processing

When it comes to Natural Language Processing (NLP), CRFs are like the secret sauce that adds that extra zing to your text analysis endeavors. From named entity recognition to part-of-speech tagging and chunking, CRFs have long been a workhorse for extracting structured information from text, because they label every token while taking its neighbors’ labels into account. They’re the linguistic wizards we never knew we needed! 🧙‍♂️
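In named entity recognition, a CRF typically predicts one tag per token, and the entities are then read off the tag sequence. The sketch below assumes the common BIO scheme (B- begins an entity, I- continues it, O is outside any entity); the tokens and tags are made-up examples, not output from a real model.

```python
def bio_to_spans(tokens, tags):
    """Group BIO tags into (entity_type, text) spans."""
    spans = []
    current_type, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != current_type):
            # Close any open entity and start a new one. A stray I- tag is
            # treated as the start of an entity, a common lenient convention.
            if current_type is not None:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = tag[2:], [token]
        elif tag.startswith("I-"):
            current_tokens.append(token)
        else:  # "O" ends any open entity
            if current_type is not None:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
    if current_type is not None:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


tokens = ["Ada", "Lovelace", "worked", "in", "London", "."]
tags = ["B-PER", "I-PER", "O", "O", "B-LOC", "O"]
print(bio_to_spans(tokens, tags))
```

Here the output is `[('PER', 'Ada Lovelace'), ('LOC', 'London')]`.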

Image Segmentation

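But wait, CRFs aren’t just wordsmiths; they’ve got a flair for visuals too! In image segmentation, where every pixel gets a label, CRFs shine bright like a diamond. They’re often used to clean up the output of a pixel classifier by encouraging neighboring pixels (especially ones with similar colors) to share a label, which removes isolated speckles and snaps labels to object boundaries. The result: segmentations as crisp and clean as a freshly ironed shirt! 📸✨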
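A grid-shaped CRF for segmentation scores each pixel’s label with a unary cost (for example, derived from a classifier’s confidence) plus a pairwise cost for neighboring pixels that disagree. The sketch below uses a simple Potts penalty and iterated conditional modes (ICM), a basic approximate inference method, on a tiny hypothetical label map. It is not the fully connected, color-aware CRF used in some segmentation pipelines; it only illustrates how the pairwise term smooths out an isolated mistake.

```python
import numpy as np


def icm_smooth(unary, pairwise_weight=1.0, n_iters=5):
    """Approximate MAP labeling of a 4-connected grid CRF with a Potts term.

    unary[r, c, k] is the cost of giving pixel (r, c) label k (lower is better).
    Each 4-neighbor with a different label adds pairwise_weight to the cost.
    """
    rows, cols, n_labels = unary.shape
    labels = unary.argmin(axis=2)              # start from the per-pixel best label
    for _ in range(n_iters):
        changed = False
        for r in range(rows):
            for c in range(cols):
                costs = unary[r, c].copy()
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols:
                        # Potts penalty for every label that disagrees with this neighbor
                        costs += pairwise_weight * (np.arange(n_labels) != labels[nr, nc])
                new_label = int(costs.argmin())
                if new_label != labels[r, c]:
                    labels[r, c] = new_label
                    changed = True
        if not changed:                        # converged to a local minimum
            break
    return labels


# Hypothetical noisy foreground mask: the center pixel is mislabeled.
noisy = np.array([
    [1, 1, 1],
    [1, 0, 1],
    [1, 1, 1],
])
# Unary cost: 0.0 for the noisy label, 0.6 for the other label (weak confidence).
unary = np.stack([(noisy != 0) * 0.6, (noisy != 1) * 0.6], axis=2)
print(icm_smooth(unary, pairwise_weight=0.5))
```

With `pairwise_weight=0.5` the four disagreeing neighbors outweigh the center pixel’s weak evidence and it flips to 1; with a small weight such as 0.1 the unary term wins and the speckle stays.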

Training Conditional Random Fields

Now, let’s get down to the nitty-gritty of training these CRFs. Buckle up, folks; it’s about to get technical!

Feature Selection

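One of the crucial steps in training CRFs is designing and selecting the right features to feed into the model. For text, that typically means describing each token (and often its neighbors) with properties such as the word itself, its suffix, or whether it’s capitalized. It’s like preparing a gourmet meal; you want to make sure you’re using the freshest ingredients to get that perfect flavor! 🍲 So, choose your features wisely, my friends, for they hold the key to unlocking the full potential of your CRF model.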
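In practice, choosing features for a text CRF usually means writing a function that describes each token as a dictionary of feature names and values. The sketch below produces one dict per token, the same list-of-dicts shape the pycrfsuite example later in this post uses (python-crfsuite also accepts numeric and boolean values). The specific features are common illustrative choices, not a prescribed set.

```python
def token_features(sentence, i):
    """Describe token i of a tokenized sentence as a dict of features."""
    word = sentence[i]
    features = {
        "bias": 1.0,
        "word.lower": word.lower(),
        "word.suffix3": word[-3:],
        "word.istitle": word.istitle(),
        "word.isdigit": word.isdigit(),
    }
    # Neighboring words give the model local context.
    if i > 0:
        features["prev.lower"] = sentence[i - 1].lower()
    else:
        features["BOS"] = True   # beginning of sentence
    if i < len(sentence) - 1:
        features["next.lower"] = sentence[i + 1].lower()
    else:
        features["EOS"] = True   # end of sentence
    return features


def sentence_features(sentence):
    """One feature dict per token, i.e. one feature sequence per sentence."""
    return [token_features(sentence, i) for i in range(len(sentence))]


print(sentence_features(["Ada", "visited", "Paris"])[0])
```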

Parameter Estimation

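Ah, parameter estimation, the heart and soul of training any machine learning model. In the case of CRFs, this means learning a weight for every feature by maximizing the conditional log-likelihood of the training label sequences, typically with a gradient-based optimizer such as L-BFGS plus a regularization penalty. Each gradient step compares how often a feature fires in the training data with how often the current model expects it to fire. It’s like being a sculptor, chiseling away to create the masterpiece that is your CRF model. 🎨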
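The expensive part of that objective is the normalizer: log P(y | x) = score(y, x) - log Z(x), and Z(x) sums over exponentially many label sequences. The forward algorithm computes log Z(x) in time linear in the sequence length. The NumPy sketch below does this in log space and checks it against brute-force enumeration; the random scores are hypothetical stand-ins for weighted feature sums.

```python
import itertools

import numpy as np


def log_sum_exp(a, axis=None):
    """Numerically stable log(sum(exp(a))) along an axis (or over all entries)."""
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))


def log_partition(emissions, transitions):
    """Forward algorithm: log Z(x) for a linear-chain CRF."""
    alpha = emissions[0]                      # log-scores of all length-1 prefixes
    for t in range(1, len(emissions)):
        # alpha_new[j] = logsumexp_i(alpha[i] + transitions[i, j]) + emissions[t, j]
        alpha = log_sum_exp(alpha[:, None] + transitions, axis=0) + emissions[t]
    return log_sum_exp(alpha)


def sequence_score(labels, emissions, transitions):
    """score(y, x): emission scores plus transition scores along the path."""
    s = sum(emissions[t, y] for t, y in enumerate(labels))
    s += sum(transitions[a, b] for a, b in zip(labels, labels[1:]))
    return s


def log_likelihood(labels, emissions, transitions):
    """log P(y | x) = score(y, x) - log Z(x); training maximizes its sum over the data."""
    return sequence_score(labels, emissions, transitions) - log_partition(emissions, transitions)


rng = np.random.default_rng(0)
emissions = rng.normal(size=(4, 3))    # 4 positions, 3 labels (hypothetical scores)
transitions = rng.normal(size=(3, 3))

brute = np.log(sum(
    np.exp(sequence_score(y, emissions, transitions))
    for y in itertools.product(range(3), repeat=4)
))
print(log_partition(emissions, transitions), brute)
print(log_likelihood([0, 1, 1, 2], emissions, transitions))
```

Both printed normalizers agree up to floating-point error. A real trainer would also need the gradient, which libraries compute with the forward-backward algorithm.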

Challenges in Conditional Random Fields

But hey, it’s not all sunshine and rainbows in the land of CRFs; there are challenges too! Let’s shine a light on some of the hurdles you might encounter along the way.

Overfitting

Ah, overfitting, the pesky little gremlin that haunts every data scientist’s dreams. CRFs are not immune to its tricks! Watch out for overfitting, where your model fits the training data too closely, potentially leading to poor generalization on unseen data. It’s like a suit tailored so tightly to one pose that you can’t move in it; it looks perfect in the fitting room and fails the moment you walk outside! 🧐👔

Sparse Data Issues

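Sparse data issues can also rear their ugly heads when working with CRFs. Text CRFs often use a huge number of very specific features (like the identity of one particular word), and many of them fire only a handful of times in the training data, so the model has too little evidence to set their weights reliably. It’s much like trying to paint a detailed portrait with only a few brushstrokes, a real test of the model’s ingenuity! 🎨🖌️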
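One common mitigation is to drop features that fire too rarely to be estimated reliably; CRFsuite’s trainer lists a `feature.minfreq` parameter for this. The plain-Python sketch below shows a simplified version of the idea: count each (name, value) feature across hypothetical training sequences in the list-of-dicts format and keep only those seen at least `min_count` times. The threshold and data are illustrative.

```python
from collections import Counter


def prune_rare_features(sequences, min_count=2):
    """Keep only (name, value) features that occur at least min_count times."""
    counts = Counter(
        (name, value)
        for seq in sequences
        for token in seq
        for name, value in token.items()
    )
    pruned = [
        [
            {name: value for name, value in token.items() if counts[(name, value)] >= min_count}
            for token in seq
        ]
        for seq in sequences
    ]
    return pruned, counts


# Hypothetical training data: "word.lower" values are sparse, "istitle" is not.
train = [
    [{"word.lower": "ada", "istitle": True}, {"word.lower": "wrote", "istitle": False}],
    [{"word.lower": "grace", "istitle": True}, {"word.lower": "wrote", "istitle": False}],
]
pruned, counts = prune_rare_features(train, min_count=2)
print(pruned)
```

The one-off names “ada” and “grace” are dropped, while the frequent capitalization feature and the repeated word “wrote” survive.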

Improving Conditional Random Fields

But fear not, dear readers! There are ways to overcome these challenges and elevate your CRF game to new heights. Let’s explore some strategies to level up your CRF skills!

Using Regularization Techniques

Regularization techniques help prevent overfitting and keep your CRF model in check by penalizing large weights. L2 regularization shrinks all weights toward zero, while L1 regularization drives many of them exactly to zero, which also yields a smaller model. By adding a sprinkle of regularization, you can ensure that your model stays on the straight and narrow path to generalization glory. It’s like giving your model a sturdy safety net to prevent it from falling off the tightrope! 🤹‍♀️🌟
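For reference, a regularized CRF training objective combining both penalties has the general shape below, where w is the weight vector, the sum runs over N training pairs (x⁽ⁿ⁾, y⁽ⁿ⁾), and c₁ and c₂ play the role of the `c1` and `c2` coefficients in the pycrfsuite example later in this post. Scaling conventions for each term vary between implementations, so treat this as a sketch of the idea rather than CRFsuite’s exact formula.

```latex
\min_{\mathbf{w}} \; -\sum_{n=1}^{N} \log p_{\mathbf{w}}\big(\mathbf{y}^{(n)} \mid \mathbf{x}^{(n)}\big)
\;+\; c_1 \lVert \mathbf{w} \rVert_1 \;+\; c_2 \lVert \mathbf{w} \rVert_2^2
```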

Handling Imbalanced Data

When faced with imbalanced data, such as a corpus where most tokens are tagged O and a few entity types appear only rarely, CRFs can struggle to learn effectively from the minority classes. But fret not! Techniques like oversampling sequences that contain rare labels, undersampling sequences that contain only common ones, or more advanced resampling methods can balance out the scales and give every class its time to shine. It’s like hosting a party where everyone gets a chance to hit the dance floor! 🎉🕺
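Because a CRF learns from whole sequences, resampling is usually done per sentence rather than per token: a single token can’t be duplicated without its context. A minimal sketch, assuming a hypothetical set of rare labels and a fixed duplication factor:

```python
def oversample_rare(X, y, rare_labels, factor=3):
    """Include each (features, labels) pair containing a rare label `factor` times in total."""
    X_out, y_out = [], []
    for xseq, yseq in zip(X, y):
        copies = factor if any(label in rare_labels for label in yseq) else 1
        # The copies share references to the same lists; fine as long as they are not mutated.
        X_out.extend([xseq] * copies)
        y_out.extend([yseq] * copies)
    return X_out, y_out


X = [["f1", "f2"], ["f3"], ["f4", "f5"]]   # placeholder feature sequences
y = [["O", "O"], ["B-MISC"], ["O", "B-PER"]]
X_bal, y_bal = oversample_rare(X, y, rare_labels={"B-MISC"}, factor=3)
print(len(X_bal), y_bal.count(["B-MISC"]))
```

This prints `5 3`: the sentence containing the rare B-MISC label now appears three times. Keep evaluation data un-resampled so scores reflect the real label distribution.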

In Closing

And there you have it, folks! A whirlwind tour of the quirky world of Conditional Random Fields. 🌪️ I hope this journey has piqued your curiosity and inspired you to dive deeper into the realm of machine learning mysteries. Thank you for joining me on this adventure, and remember, when life gives you data, train a Conditional Random Field! 🤖✨

Stay curious, stay bold, and keep on learning! Until next time, fabulous readers! 👩‍💻🚀

Overall, the topic of Conditional Random Fields is a fascinating rabbit hole to dive into, full of surprises and challenges at every turn. 💡 As we unravel the mysteries of CRFs, we gain a deeper understanding of the intricate dance between data and models. Thank you all for tuning in and joining me on this exhilarating ride through the world of machine learning magic! 🌟🎩

Program Code – Unraveling Conditional Random Fields in Machine Learning

```python
import numpy as np  # not used below; often needed for feature preprocessing
import pycrfsuite


def train_crfsuite(X_train, y_train, model_path='crf.model'):
    """Train a linear-chain CRF with python-crfsuite and save it to model_path."""
    trainer = pycrfsuite.Trainer(verbose=False)

    # Submit each (feature sequence, label sequence) pair to the trainer
    for xseq, yseq in zip(X_train, y_train):
        trainer.append(xseq, yseq)

    # Set the training parameters
    trainer.set_params({
        'c1': 1.0,             # coefficient for L1 penalty
        'c2': 1e-3,            # coefficient for L2 penalty
        'max_iterations': 50,  # stop after at most 50 optimizer iterations
        # Include transitions that are possible, but not observed
        'feature.possible_transitions': True,
    })

    # Train the model and write it to disk
    trainer.train(model_path)


def predict_crfsuite(X_test, model_path='crf.model'):
    """Tag each feature sequence in X_test with the saved model."""
    tagger = pycrfsuite.Tagger()
    tagger.open(model_path)
    y_pred = []
    for xseq in X_test:
        y_pred.append(tagger.tag(xseq))
    return y_pred


# Example usage with a toy dataset: one feature dict per token
X_train = [
    [{'feature1': 'value1', 'feature2': 'value2'},
     {'feature1': 'value3', 'feature2': 'value4'}],
    [{'feature1': 'value5', 'feature2': 'value6'}],
]
y_train = [['label1', 'label2'], ['label3']]
X_test = [
    [{'feature1': 'value7', 'feature2': 'value8'},
     {'feature1': 'value9', 'feature2': 'value10'}],
]

train_crfsuite(X_train, y_train)
y_pred = predict_crfsuite(X_test)
print('Predicted labels for the test set:', y_pred)
```

Code Output:

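Predicted labels for the test set: [[<label>, <label>]]

Here each <label> is one of 'label1', 'label2', or 'label3'; which ones you get depends on the learned weights. A CRF can only output labels it saw during training, so a label such as 'labelX' cannot appear. Because none of the test features (value7 to value10) occur in the training data, they contribute nothing to the score, so this toy prediction says little about real accuracy.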

Code Explanation:

The given program snippet is an example of how to use Conditional Random Fields (CRFs) in Python for sequence prediction tasks using the pycrfsuite library. CRFs are a class of statistical modeling methods often used in pattern recognition and machine learning for structured prediction.

Initially, we import the necessary libraries: numpy for any potential numerical computations (not explicitly used in this snippet but often essential in feature preprocessing), and pycrfsuite for the CRF model.

The function train_crfsuite takes in a training dataset composed of features (X_train) and labels (y_train). It sets up a CRF trainer, iterates over the training dataset to append each feature sequence together with its label sequence, and specifies the training parameters. Notable among these parameters are c1 and c2, the coefficients for L1 and L2 regularization respectively, which help prevent overfitting by penalizing large weights. max_iterations caps the number of iterations of the optimization algorithm, and feature.possible_transitions lets the model create transition features for label pairs that never appear next to each other in the training data. After configuration, the model is trained on these sequences and saved to a file named ‘crf.model’.

The predict_crfsuite function is where prediction happens. It initializes a tagger from the trained model file, iterates over the test set features (X_test), and uses the tagger to predict a label sequence for each input sequence, collecting the results in y_pred, a list of predicted label sequences.

In the example usage, X_train and y_train represent a toy training dataset with a simplistic feature-set and associated labels. X_test is our test data for which we want to predict labels. After training our model on the training set, we predict labels for X_test and print the predictions.

This toy example demonstrates the full train-then-tag workflow for sequence labeling with a Conditional Random Field. With only two training sequences it says nothing about accuracy, but the same pattern of building per-token feature dicts, training, and tagging carries over to real sequence prediction tasks.

FAQ: Unraveling Conditional Random Fields in Machine Learning

- What are Conditional Random Fields (CRFs) in machine learning?

Conditional Random Fields (CRFs) are a type of probabilistic graphical model often used for segmenting and labeling sequential data. They are commonly used in natural language processing, bioinformatics, and computer vision.

- How do Conditional Random Fields differ from other machine learning models?

Unlike generative models such as Hidden Markov Models, which model the joint probability of inputs and labels, CRFs directly model the conditional probability of a label sequence given the input features. This lets them use rich, overlapping input features, which makes them especially useful for tasks like named entity recognition and part-of-speech tagging.

- What is the role of the keyword “conditional random field” in machine learning applications?

The keyword “conditional random field” helps researchers and practitioners in machine learning find resources, research papers, and tools related specifically to CRFs.

- Can you provide an example of a real-world application where Conditional Random Fields are used?

Certainly! One common application is in natural language processing for tasks like named entity recognition, where CRFs label entities such as names of people, organizations, or locations in a text.

- Are there any specific challenges associated with implementing Conditional Random Fields in machine learning projects?

Implementing CRFs can be complex due to the need for labeled sequential data, feature engineering, and hyperparameter tuning. However, the accuracy and performance they offer often make them worth the effort.

- How can one get started with learning about Conditional Random Fields?

To start learning about CRFs, one can explore online tutorials, read research papers on the topic, and experiment with open-source machine learning libraries that support CRFs, such as CRFsuite and sklearn-crfsuite.

- What are some common tools or libraries used for working with Conditional Random Fields?

Researchers and developers often use libraries like CRFsuite (and its Python binding, python-crfsuite), sklearn-crfsuite, and NLTK (Natural Language Toolkit) for implementing and experimenting with Conditional Random Fields in various machine learning projects.