Data Workflow Mastery 🚀

In today’s data-driven world, mastering Extraction, Transformation, and Loading (ETL) is crucial for anyone working in data management. 📊 Let’s dive into the exciting realm of ETL processes, from the basics to the cutting-edge trends!

Understanding Extraction, Transformation, and Loading 🔄

Definition and Importance 🌟

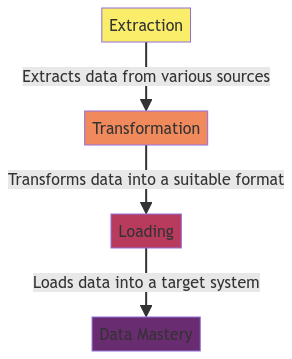

Imagine you have a messy room full of random stuff 🧹. ETL is like tidying up that chaos; it’s about extracting data from various sources, transforming it into a structured format, and finally, loading it into a target database for analysis. ETL forms the backbone of data integration, ensuring that insights can be derived from raw information.

Common Tools and Techniques 🛠️

- ETL Tools: From classic stalwarts like Informatica to modern heroes like Apache NiFi, there’s a plethora of tools to choose from. It’s like picking the perfect wand in a wizarding world! 🪄

- Techniques: SQL queries, scripting languages like Python, and specialized ETL scripts are the magical spells that make data dance to your tune. ✨
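To make the SQL technique a little more concrete, here is a minimal sketch that uses Python’s built-in `sqlite3` module to clean and reshape rows with a single query. The table names (`raw_users`, `clean_users`), the columns, and the sample rows are all made up for illustration.

```python
import sqlite3


def transform_with_sql(conn):
    """Build a cleaned table from raw_users using plain SQL."""
    conn.execute("DROP TABLE IF EXISTS clean_users")
    conn.execute(
        """
        CREATE TABLE clean_users AS
        SELECT
            id,
            TRIM(first_name) || ' ' || TRIM(last_name) AS full_name,
            LOWER(email) AS email
        FROM raw_users
        WHERE email IS NOT NULL
        """
    )
    conn.commit()
    return conn.execute(
        "SELECT id, full_name, email FROM clean_users ORDER BY id"
    ).fetchall()


# Hypothetical sample data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE raw_users (id INTEGER, first_name TEXT, last_name TEXT, email TEXT)"
)
conn.executemany(
    "INSERT INTO raw_users VALUES (?, ?, ?, ?)",
    [
        (1, " Ada ", "Lovelace", "ADA@Example.com"),
        (2, "Alan", "Turing", None),  # filtered out: no email
        (3, "Grace", " Hopper", "grace@example.com"),
    ],
)
rows = transform_with_sql(conn)
print(rows)
```

Pushing the transformation into SQL keeps the work inside the database, which can be handy when the data is already there.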

Best Practices for Efficient Data ETL 🏆

Data Quality Assurance 🕵️‍♂️

Ensuring that your data is squeaky clean is vital. Imagine trying to bake a cake with spoiled ingredients; it’s a recipe for disaster! Implementing data quality checks during ETL, such as looking for missing values, duplicate keys, and unexpected types, prevents errors from seeping into your analyses. It’s just like a vigilant chef tasting the dish before serving! 👩‍🍳
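One way such a check might look is sketched below with pandas. The function name `check_quality`, the column names, and the sample data are assumptions for the example. It reports problems rather than fixing them, so the pipeline can decide whether to stop or continue.

```python
import pandas as pd


def check_quality(df, required_columns, key_column):
    """Return a list of human-readable data quality problems (empty if none).

    Assumes key_column is one of required_columns.
    """
    problems = []

    missing = [c for c in required_columns if c not in df.columns]
    if missing:
        problems.append(f"missing columns: {missing}")
        return problems  # the remaining checks need these columns

    # Count nulls per required column.
    null_counts = df[required_columns].isna().sum()
    for column, count in null_counts.items():
        if count > 0:
            problems.append(f"{column}: {count} null value(s)")

    # Count repeated keys (the first occurrence is not counted).
    duplicates = int(df[key_column].duplicated().sum())
    if duplicates:
        problems.append(f"{key_column}: {duplicates} duplicate value(s)")

    return problems


# Hypothetical sample with one null and one duplicate key.
sample = pd.DataFrame(
    {
        "id": [1, 2, 2],
        "first_name": ["Ada", None, "Grace"],
        "last_name": ["Lovelace", "Turing", "Hopper"],
    }
)
issues = check_quality(sample, ["id", "first_name", "last_name"], key_column="id")
print(issues)
```

Running a check like this between the transform and load steps gives you a chance to reject a bad batch before it reaches the target table.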

Automation and Scalability 🤖

Why do a task manually when you can automate it? Embrace automation tools like cron jobs, Apache Airflow, or other schedulers. Scalability matters just as much: a pipeline that copes with today’s volume should keep working as your data ecosystem grows. Think of it as turning on the autopilot for your data processes! ✈️
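Whatever scheduler you pick, it helps if the job reports success or failure clearly, since many schedulers and monitoring wrappers treat a non-zero exit code as a failed run. The sketch below shows one possible wrapper. `run_job`, `example_pipeline`, and `failing_pipeline` are hypothetical names, and the pipelines are placeholders for real extract, transform, and load steps.

```python
import logging

logging.basicConfig(
    level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s"
)
logger = logging.getLogger("etl_job")


def run_job(pipeline):
    """Run a pipeline callable and return a process exit code (0 = success)."""
    try:
        pipeline()
    except Exception:
        # Log the full traceback so the scheduler's log shows what failed.
        logger.exception("ETL run failed")
        return 1
    logger.info("ETL run finished")
    return 0


def example_pipeline():
    """Placeholder for the real extract/transform/load steps."""
    logger.info("extract -> transform -> load")


def failing_pipeline():
    """Placeholder pipeline that simulates an unavailable source."""
    raise RuntimeError("source unavailable")


exit_code = run_job(example_pipeline)
print("exit code:", exit_code)
# In a real script, hand the code back to the scheduler with sys.exit(exit_code).
```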

Challenges in ETL Processes 🤯

Data Loss and Inconsistencies 🕳️

Data can be a tricky beast. Losses during transfer and inconsistencies in formats can haunt your dreams. It’s like trying to catch a slippery fish with your bare hands! 🐟 Be vigilant and implement robust error handling to tackle these issues head-on.
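As a rough sketch of what robust error handling could mean in practice, the example below retries a flaky extract step with exponential backoff and then checks that no rows went missing between extraction and loading. The function names, the simulated failure, and the delay values are assumptions chosen for illustration.

```python
import time


def with_retries(operation, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call operation(); on failure wait and retry, doubling the delay each time."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: surface the original error
            sleep(base_delay * 2 ** (attempt - 1))


def assert_no_rows_lost(extracted_count, loaded_count, rejected_count=0):
    """Fail loudly if rows disappeared between extraction and loading."""
    if extracted_count != loaded_count + rejected_count:
        raise ValueError(
            f"row count mismatch: extracted={extracted_count}, "
            f"loaded={loaded_count}, rejected={rejected_count}"
        )


# Hypothetical flaky source that fails twice before succeeding.
calls = {"count": 0}


def flaky_extract():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network problem")
    return [{"id": 1}, {"id": 2}]


delays = []
# Record the delays instead of actually sleeping, to keep the demo fast.
rows = with_retries(flaky_extract, sleep=delays.append)
assert_no_rows_lost(extracted_count=len(rows), loaded_count=2)
print(rows, delays)
```

Retries deal with temporary hiccups, while the row-count comparison catches silent data loss that would otherwise go unnoticed.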

Integration with Legacy Systems 🏰

Ah, the old vs. the new dilemma. Legacy systems speak a different language, and getting them to dance to the modern ETL tunes can be a challenge. It’s like teaching your grandma to use emojis; a fun challenge, but not without its hurdles! 👵📱
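Legacy exports often arrive as fixed-width text rather than JSON or CSV. The sketch below shows one way to translate such a record. The layout, field names, and sample line are entirely hypothetical, so a real system’s record specification would replace `LAYOUT`.

```python
from datetime import datetime

# Hypothetical record layout: (field name, start offset, end offset).
LAYOUT = [
    ("customer_id", 0, 6),
    ("name", 6, 26),
    ("signup_date", 26, 34),  # stored as YYYYMMDD
]


def parse_fixed_width(line, layout=LAYOUT):
    """Slice one fixed-width line into a dictionary of trimmed strings."""
    return {name: line[start:end].strip() for name, start, end in layout}


def normalize(record):
    """Convert legacy string values into the types a modern target expects."""
    signup = datetime.strptime(record["signup_date"], "%Y%m%d").date()
    return {
        "customer_id": int(record["customer_id"]),
        "name": record["name"],
        "signup_date": signup.isoformat(),
    }


legacy_line = "000042" + "Ada Lovelace".ljust(20) + "20240131"
record = normalize(parse_fixed_width(legacy_line))
print(record)
```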

Security Considerations in ETL 🔒

Encryption and Data Protection 🛡️

Data security is non-negotiable. Implement encryption at rest and in transit to shield your precious data from prying eyes. Think of it as locking your diary with a spell that only you can unravel! 📜🔐
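For the in-transit half of that advice, one small safeguard is to refuse to extract from any URL that is not HTTPS. The sketch below uses the standard library’s `urllib.parse`. The helper name `require_https` is an assumption, and this check complements rather than replaces encryption at rest.

```python
from urllib.parse import urlparse


def require_https(url):
    """Return the URL unchanged if it uses HTTPS, otherwise raise ValueError."""
    parsed = urlparse(url)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError(f"refusing to fetch over a non-HTTPS URL: {url!r}")
    return url


safe_url = require_https("https://example.com/api/users")
print(safe_url)

try:
    require_https("http://example.com/api/users")
except ValueError as error:
    print(error)
```

Calling a guard like this at the top of the extract step means a misconfigured plain-HTTP source fails fast instead of sending data in the clear.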

Role-Based Access Control 🦸‍♂️

Not everyone should have the keys to the kingdom. Role-based access ensures that only authorized personnel can view or manipulate sensitive data. It’s like having bouncers at a party; they decide who gets in! 🚪🕵️‍♀️
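At its simplest, role-based access boils down to a mapping from roles to permitted actions that is checked before sensitive work happens. The sketch below illustrates the idea. The roles, permissions, and the `load_table` helper are hypothetical, and a production setup would normally lean on the database’s or platform’s own access controls.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "delete"},
}


def is_allowed(role, action):
    """Return True if the role is known and grants the action."""
    return action in ROLE_PERMISSIONS.get(role, set())


def load_table(role, table_name, rows):
    """Pretend to load rows, but only for roles with write permission."""
    if not is_allowed(role, "write"):
        raise PermissionError(f"role {role!r} may not write to {table_name}")
    return len(rows)  # stand-in for the real load step


print(load_table("engineer", "users", [{"id": 1}, {"id": 2}]))

try:
    load_table("analyst", "users", [{"id": 3}])
except PermissionError as error:
    print(error)
```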

Future Trends and Innovations in ETL 🔮

AI and Machine Learning Integration 🤖

The future is here, and it’s AI-powered! Integrating machine learning algorithms into ETL processes can supercharge your data workflows, for example by flagging anomalous records or suggesting how source fields map to a target schema. It’s like having a robot assistant that learns and adapts to your needs! 🧠🌟

Real-time Data Processing Capabilities ⏰

Gone are the days of waiting for the nightly batch; real-time data processing is the new cool kid on the block. Each record is handled as it arrives, which means instant insights and immediate actions. It’s like watching a live broadcast instead of reading yesterday’s newspaper! 🔮🚀
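The core idea can be sketched without any streaming framework: process each event as it arrives and emit an updated result straight away, instead of waiting for a full batch. In the example below the event shape (`user`, `amount`) and the function name `process_stream` are assumptions, and a plain iterator stands in for a real message queue.

```python
from collections import defaultdict


def process_stream(events):
    """Yield an updated running total per user after every valid event."""
    totals = defaultdict(float)
    for event in events:
        if event.get("amount") is None:
            continue  # skip malformed events rather than stopping the stream
        totals[event["user"]] += event["amount"]
        yield event["user"], totals[event["user"]]


# Hypothetical events; in practice these would come from a queue or socket.
incoming = iter(
    [
        {"user": "ada", "amount": 10.0},
        {"user": "alan", "amount": 5.0},
        {"user": "ada", "amount": None},
        {"user": "ada", "amount": 2.5},
    ]
)
updates = list(process_stream(incoming))
print(updates)
```

Because `process_stream` is a generator, each update becomes available as soon as its event has been read, which is the property that separates streaming from batch processing.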

In Closing ✨

Ah, the magical world of data ETL! From taming unruly data to predicting future trends, mastering the art of Extraction, Transformation, and Loading opens doors to a realm of endless possibilities. Remember, in the data universe, ETL is your wand, your guiding star! ✨🪄

Thank you for joining me on this whimsical journey through the enchanting world of Data Workflow Mastery! Stay curious, stay data-driven, and above all, stay magical! ✨🌈

Let the data dance to your tune! 💃🎶

Program Code – Data Workflow Mastery: Extraction Transformation and Loading

    import pandas as pd
    import requests
    from sqlalchemy import create_engine


    # Define a function to extract data from an API
    def extract_data(url):
        '''Extract data from a provided API URL'''
        # A timeout keeps the job from hanging on an unresponsive API
        response = requests.get(url, timeout=30)
        # Fail on 4xx/5xx responses instead of trying to parse an error page
        response.raise_for_status()
        data = response.json()
        return data


    # Define a function to transform the extracted data
    def transform_data(data):
        '''Transform the data into a pandas DataFrame and clean it'''
        df = pd.DataFrame(data)
        # Assuming data needs cleaning: drop rows that contain null values
        df.dropna(inplace=True)
        # Example transformation: combine the name fields into one column
        df['Full Name'] = df['first_name'] + ' ' + df['last_name']
        return df


    # Define a function to load the transformed data into a SQL database
    def load_data(df, database_uri, table_name):
        '''Load data into a specified table in a SQL database'''
        engine = create_engine(database_uri)
        # if_exists='replace' drops and recreates the table on every run
        df.to_sql(table_name, engine, index=False, if_exists='replace')


    # Main function to execute the ETL process
    def main():
        # URL for the API to extract data from
        api_url = 'https://example.com/api/users'
        # Database URI to load the data into
        database_uri = 'sqlite:///example.db'
        # Table name to load the data into
        table_name = 'users'

        # Extract
        raw_data = extract_data(api_url)
        # Transform
        transformed_data = transform_data(raw_data)
        # Load
        load_data(transformed_data, database_uri, table_name)

        print('ETL process completed successfully.')


    if __name__ == '__main__':
        main()

Code Output (assuming the API returns a JSON list of user records with first_name and last_name fields):

ETL process completed successfully.

Code Explanation:

This program is designed to automate the process of Extraction, Transformation, and Loading (ETL), which is crucial in data workflows to aggregate information from various sources, make it consistent, and save it to a database for analysis or other purposes.

- Extraction: The `extract_data` function takes a URL as input and makes a GET request to it using the `requests` library to fetch the data. The data is expected to be in JSON format, which is then parsed into a Python object (a list or dictionary) and returned.

- Transformation: Once the data is extracted, the `transform_data` function is called with `raw_data` as its argument. It converts this raw data into a pandas DataFrame, which makes data manipulation easier. For demonstration purposes, the program drops any rows that contain null values with `dropna` and then applies a simple transformation: combining the `first_name` and `last_name` fields into a new `Full Name` column. This step could be expanded with more complex data cleaning and transformation logic as required.

- Loading: The transformed data is now ready to be persisted into a database. The `load_data` function takes the DataFrame, a database URI, and a table name as its arguments. It uses SQLAlchemy to create a connection to the database and loads the DataFrame into the specified table. If the table doesn’t exist, it will be created; if it does, the existing data will be replaced.

The final part of the program is the `main` function, which is called from the `if __name__ == '__main__':` block. It defines the workflow by specifying the API URL to extract data from, the URI of the database to load the data into, and the table name. After the ETL steps are executed in sequence, a confirmation message is printed.

By encapsulating the ETL process in modular functions, the program keeps each step independent, so it can be easily modified or expanded to cater to different data sources, transformations, or target databases, making it a versatile starting point for data workflow mastery.
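To illustrate that modularity, here is a hypothetical alternative extract step that reads CSV text instead of calling an API. The function name `extract_data_from_csv` and the sample data are assumptions. The sketch relies on the fact that `pd.DataFrame` accepts a list of dictionaries, so the transform step should be able to consume this output as well.

```python
import csv
import io


def extract_data_from_csv(text):
    """Alternative extract step: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


# Hypothetical CSV export with the same fields the transform step uses.
csv_text = "first_name,last_name\nAda,Lovelace\nGrace,Hopper\n"
raw_data = extract_data_from_csv(csv_text)
print(raw_data)
```

One caveat: the `csv` module returns every value as a string and represents empty fields as empty strings rather than nulls, so a `dropna`-style cleanup would not remove them without an extra conversion.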

Frequently Asked Questions about Data Workflow Mastery: Extraction Transformation and Loading

What is the importance of extraction, transformation, and loading in a data workflow?

The process of extraction, transformation, and loading (ETL) is crucial in a data workflow because it pulls data from various sources, transforms it into a usable format, and loads it into a destination where it can be analyzed. Without effective ETL processes, organizations may struggle to leverage their data effectively.

How does extraction differ from transformation in the data workflow process?

Extraction means pulling data out of different sources such as databases, files, or applications. Transformation, on the other hand, is the process of converting the extracted data into a format that is optimized for analysis. Both extraction and transformation are essential steps in the data workflow process.

What tools are commonly used for extraction, transformation, and loading in data workflow?

There are various tools available for extraction, transformation, and loading in data workflow, including popular ones like Apache NiFi, Talend, Informatica, and Apache Spark. These tools offer functionalities to streamline the ETL process and ensure data quality and consistency.

Can you explain the relationship between extraction, transformation, and loading in the context of data workflow?

In the data workflow process, extraction involves pulling data from one or more sources, transformation focuses on cleaning and converting the data into a standardized format, and loading is the process of writing the transformed data into a target database or data warehouse. These three components work together seamlessly to ensure data integrity and usability.

What are some common challenges faced during the extraction, transformation, and loading process?

Some challenges in ETL processes include dealing with large volumes of data, maintaining data quality, handling different data formats, ensuring data security, and optimizing performance. Overcoming these challenges requires careful planning, robust tools, and a deep understanding of the data workflow.

How can organizations optimize their extraction, transformation, and loading processes for better efficiency?

Organizations can optimize their ETL processes by automating repetitive tasks, implementing data quality checks, using parallel processing for faster execution, monitoring performance metrics, and continuously refining the process based on feedback and analytics. By focusing on continuous improvement, organizations can enhance their data workflow efficiency.
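As a small illustration of the parallel-processing point, the sketch below extracts from several sources concurrently using the standard library’s `concurrent.futures`. The source names and the `fake_fetch` function are stand-ins, and a real version would perform network or database calls, which is where threads tend to help most.

```python
from concurrent.futures import ThreadPoolExecutor


def extract_all(sources, fetch, max_workers=4):
    """Fetch every source concurrently and return {source: result}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in the same order as the inputs.
        results = pool.map(fetch, sources)
        return dict(zip(sources, results))


def fake_fetch(source):
    """Stand-in for an I/O-bound call such as an API request."""
    return [f"{source}-row-{i}" for i in range(2)]


data = extract_all(["users", "orders", "payments"], fake_fetch)
print(data)
```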

Hope these FAQs shed some light on the intricacies of data workflow mastery with a focus on extraction, transformation, and loading! 🚀 Thank you for delving into this fascinating topic!